IBM Predicts Artificial Intelligence Future

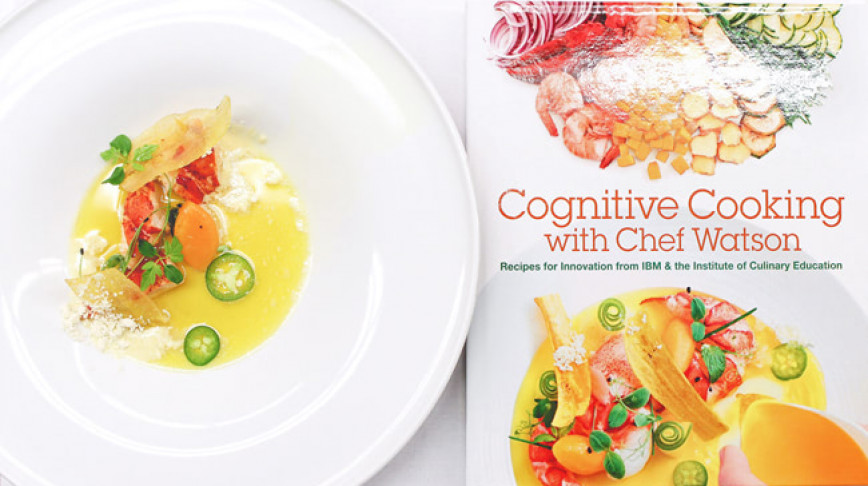

Koert van Mensvoort

While Watson's processing power grows exponentially year on year, and the technology moves steadily from answering trivia questions to giving cooking advice and now medical advice, it is about time we confront it with the million-dollar question: "Watson, what do you want?"