Augmented (Hyper)Reality

Hendrik-Jan Grievink

Augmented (hyper)Reality offers a glimpse of an alternate universe, with augmented reality cranked up to the next level.

The new Bob Ross has arrived: his name is Gautam Rao. This painting shows a magnified part of the dock in OS X. I especially wanted to highlight the Picasso-esque face on the Mac …

News at Seven is a set of preferences for what a news report should be about. Using keywords entered by the user, the program selects news site RSS feeds and specific stories to …

As a kid, I used to think that factories produced the clouds (not really, but it sounds nice). Later I found out that clouds produce clouds. Via Flickr

Always wanted to take that parachute jump from the airplane, but never dared to? Why not try an indoor sky dive? Less interesting view, but more floating time. You can jump in …

Baudrillard intersects with social media in the world of fake online girlfriends.

Digital becomes physical. Move your cursor using this mouse cursor. You can buy one here.

Today CNN.com shows a picture of a television tuned to CNN, in order to inform its viewers about nuclear tests in North Korea. Somehow I feel this is related to our investigations …

The work of photographer Michael Wolf: amazing photos of buildings, plants, food and furniture. And unicorns. His website is definitely worth a look.

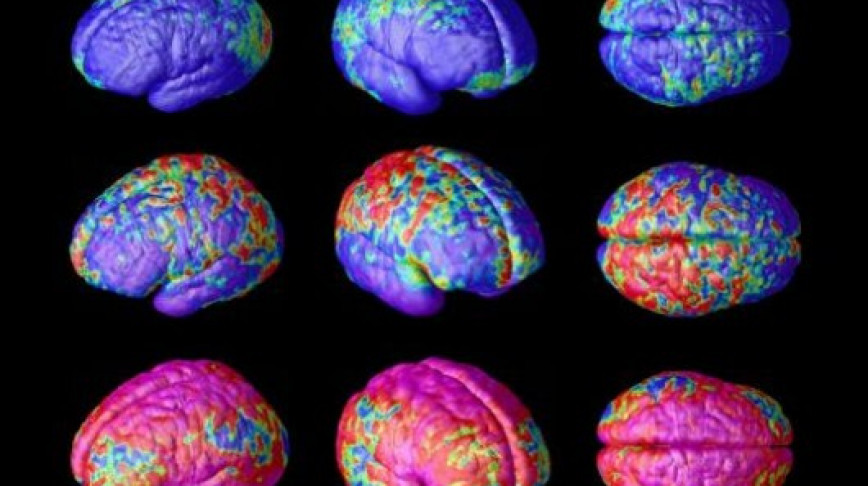

A robotic hand controlled by the power of thought alone has been demonstrated by researchers in Japan. Subjects lay inside an MRI scanner and were asked to make "rock, paper, …

T-Mobile is the proud owner of a trademark which says it owns the colour magenta. T-Mobile and its parent, Deutsche Telekom, have trademarked magenta. The move means that if you …

First we had the internet. We stored all our texts and images in databases. Then came search engines to locate the data. Now is the time to interconnect it all; to share knowledge …

Remember no-tech? Back in the day, we would occasionally write things down with a pencil on paper. If we made a mistake, we would rub something that looks like a delete button …

http://www.youtube.co/watch?v=fW0BZsOcZZE It's like... the future! Check the website.

You can't beat the real thing, but then again, some generations seem to prefer the other choice. Competition between cola brands goes back a long time. Over the years, the two …

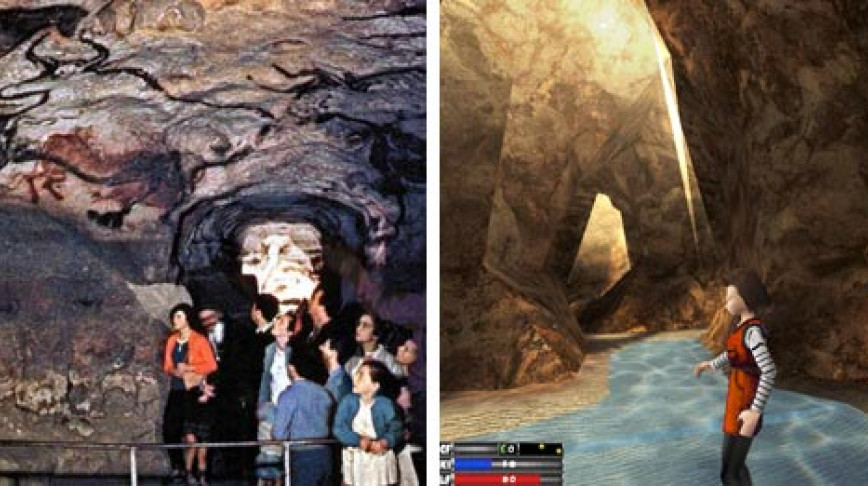

The Lascaux cave conserves some of the first images created by man, dating back to around 15,000 BC. The depiction of large animals on the cave walls is considered a …

Which of these women do you think is more beautiful? At the 2002 Miss Germany pageant, the eight finalists were photographed from the same angle without makeup. The portraits were …

I don't know about you, but to me sending smileys in emails or text messages always feels a bit primitive. It's not that I don't like the icons, I really do. I just don't …

...where no one has googled before! Of course this would be possible one day: browsing the universe from your desktop. Google did it again and unlocks space, the final frontier …

Will Wright's hugely successful games SimCity and The Sims let players shape the structure of urban areas and the lives of virtual humans; his upcoming game, Spore, lets them …

Disney's Sleeping Beauty Castle could be the single best-loved structure in the world. Ever wondered where Walt Disney got his enchanting fairytale aesthetic? The castle, which …

The Mona Lisa is the most famous painting in the world. Just about everyone knows the mysteriously smiling lady. But how many of us have actually been to the Louvre to see it with …

GRONINGEN (NL) – Low-income people in the Dutch city Groningen should be able to buy a flatscreen television, according to the alderman of social affairs Peter Verschuren. The …

(Please don't click this video away too soon; it's self–explanatory and your time will be rewarded). mindhacks.com

Animation studios working in the field of architectural practice like Squint/Opera create glimpses of the future that are so beautifully convincing, you sometimes wonder if the …

Some months ago Iran did a test to show off its missile power, which turned out to be quite embarrassing because one out of three missiles did not work. Fortunately, they had a good copy …

In the Netherlands, there is a raging debate about a movie nobody ever saw. It seems that images have become so powerful, we don't even need them anymore to scare the hell out of …

Male - BMW, Armani, Durex - is looking for a Female - Dolce & Gabbana, New York Times, Victoria's Secret. Branddating.nl is a (serious) dating site that relies on the …

This is the first viral internet movie I've seen that's actually aware of being a viral. I just can't wait until this happens for real, including the car stunts. When will virals …

So, if you don't have a clue what is going on here, imagine how that horned cow on the electricity pole must feel. Extrapeculiar image of the week.

As kids, we used to eat a cereal breakfast with rice pops. On the package of the rice pops there was a little blue bird called Dodo, who seemed to enjoy the rice pops as well. One …

Green electricity, Organic Shampoo, Jaguar convertibles, Red Bull, Bio Beef, Alligator gardening tools, Camel cigarettes and Puma sneakers. Once you develop an eye for it, it is …

Beautiful images by Mikel Uribetxeberria. I think he also made videos out of this work, or else I saw something exactly like it in a gallery in Chelsea. Gimme more

These peculiar illustrations are part of a sixteen-page pamphlet produced to put inside the postage-paid, business-reply envelopes that come with junk mail offers. Every envelope …

Nice adbust in Berlin's subway. Photoshop panels were put over the image to reassure us that nobody is actually born that beautiful. More images. See also: Photoshop Beauties …

Although this TED video has been all over the web and commented on this website already, it still deserves a separate post: Desigineers Pattie Maes and Pranav Mistry of the MIT …

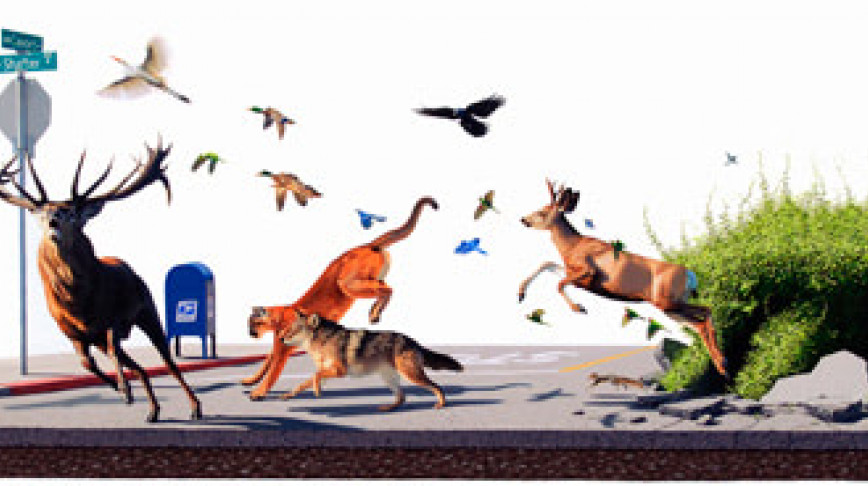

Ever wondered why there is so much competition in the world of operating systems? This video made by Leon Wang illustrates that "old nature" mechanisms like survival of the …

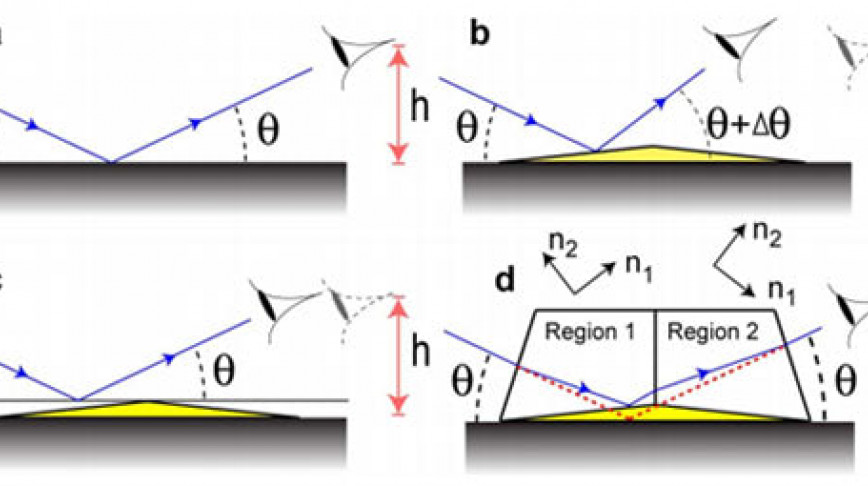

Some years ago scientists managed to build a rudimentary invisibility cloak, which was an impressive device but it had some important limitations, not least of which was that it …

![Visual of MANKO & Plagiarism [#1]](https://nextnature.net/media/pages/magazine/story/2010/manko-plagiarism-1/b50dcc7ae7-1602632753/manco-530-868x486.jpg)

In this first review of the works of Manko, we'll discuss the complex sorts of plagiarism in Augmented Reality art that are typical for our contemporary art scene. This introduces …

![Visual of Manko & The Invitation [#3]](https://nextnature.net/media/pages/magazine/story/2010/manko-the-invitation-3/a58a0af2b6-1602632753/manco-2-530-868x486.jpg)

Last month we discussed how the vacuum played a big role in Manko's life since he lost his right leg. Soon he developed this idea further, beyond the mere notion of extensions …

Surely we are quite attuned to some unexpected flavors in these quarters, but this Nano Care™ Blueberry Paste Wax wins our syncretic mash-up award for combining techno-rhetoric …

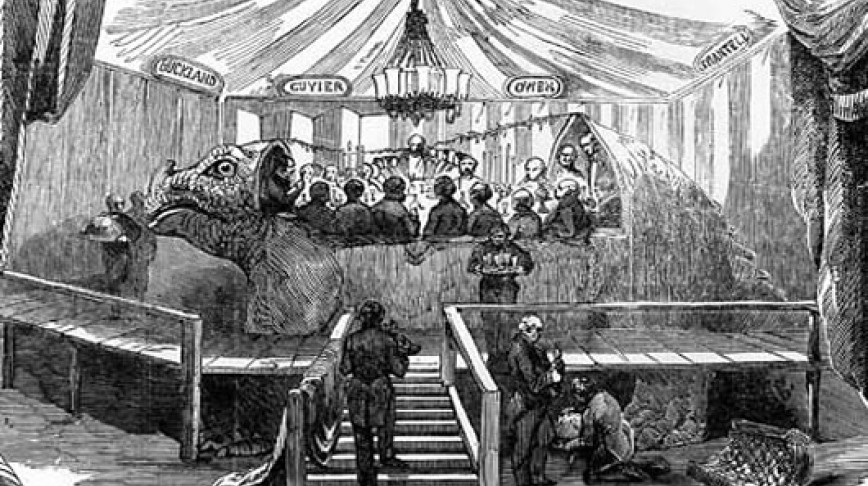

What happens when next nature dreams of old nature? Such is the case with extinct animals that have ever come in contact with humans, particularly the dinosaurs, our own …

Indulge in the paintings by Alex Gross. There is 'something' next nature about them... If you happen to have more information on what that 'something' is, feel free to enlighten us …

Named after the story of a city girl who washes her hair with pine-needle shampoo and, walking in the woods with her daddy one day, says "Daddy! The Woods Smell of Shampoo", this …

Long for farm-fresh eggs on the table? Dream about going to bed each night worrying about raccoons, rats and foxes? Like the feeling of scraping chicken shit off your hands? For …

You may want to spend 24 minutes on Close Personal Friend. Made in 1996, this film anticipates contemporary phenomena like social media and self-branding.

Image consumption in overdrive. Peculiar image of the week. Created by Erin Murphy, Victoria Bellavia, Yong Jun Lee & Sanggun Park. Thanks Jeroen van der Meij.

Why does your food look different in advertising than it does in the store? A Canadian McDonald's marketing manager tries to answer this common question with a behind-the-scenes …

As the NANO Supermarket opens discussions on the ethics, purpose and usability of nanotechnology, Frederik De Wilde is researching its artistic possibilities. De Wilde is a guest …

Convenience store chain 7-Eleven is serving up the latest in a line of futuristic near-foods: Instant mashed potatoes from a Slurpee-style spigot. The machine dispenses a stream of …

Imagine what you could do if you had one million Twitter followers. You would be so rich! Now seriously: Are followers becoming an alternative currency? Perhaps, although we are …

Computer-controlled players in video games can usually be spotted for their repetitive, illogical or unemotional behavior. Unlike humans, non-player characters (NPCs) don't get …

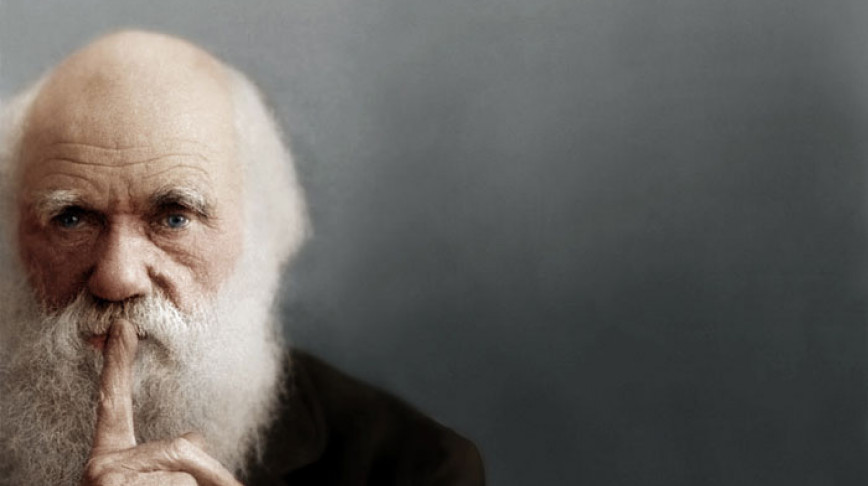

Is a colorized antique photo more or less "real" than the original?

You too can experience a life of grinding poverty in South Africa – with free WiFi and optional breakfast.

Cheetos and other junk foods are more carefully engineered than the average bridge.

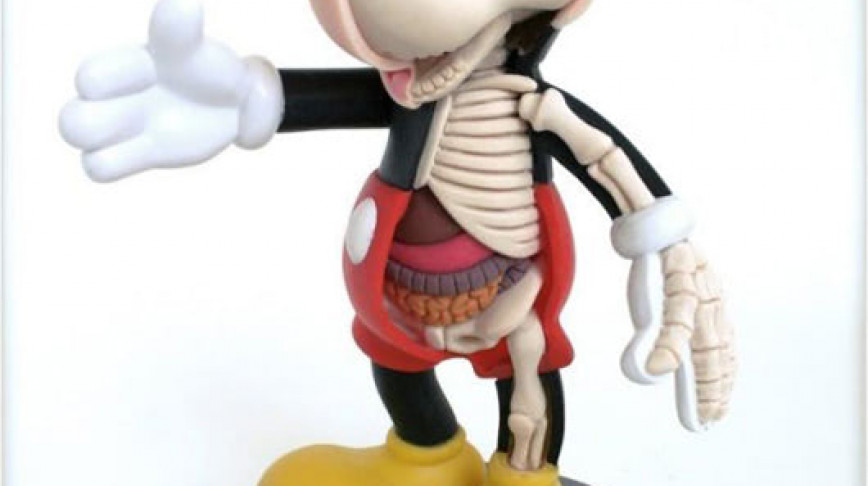

Finally a full anatomical model of the renowned comic figure.

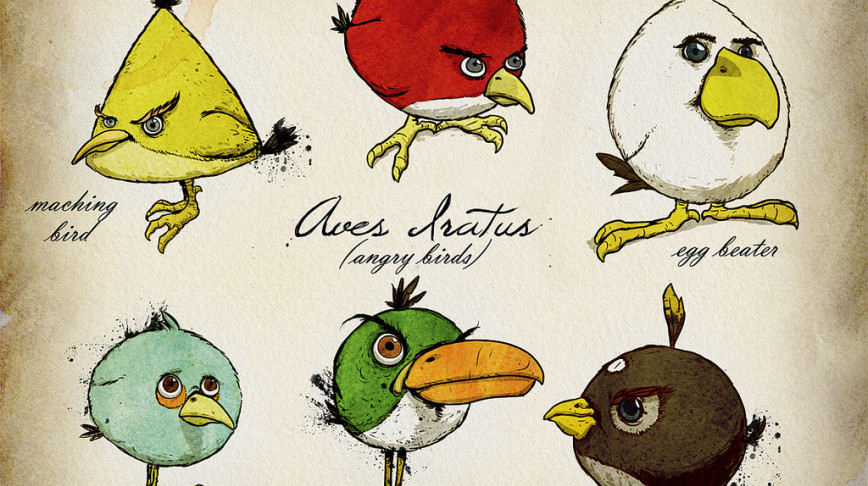

Why are these birds so angry? Probably because they don't appear in the scientific literature.

Supersonic jet replaces windows with massive live-streaming screens.

Inspired by the movement of spider webs in the wind, Dutch designer Jeroen Van Der Meij created Breathing Lights.

In Japan, the fake food industry represents a century-old crafting tradition and a multibillion business.

An artificial illumination that is "authentic" enough to replace natural light.

Most people nowadays know more logos and brands than bird or tree species. Go test your own knowledge. Take a look at the leaves and logos above and see how many you can identify without looking them up.

Realistic WWII movie Fury had to cater to the gaming generation with hyperrealistic, Star Wars-style laser-shooting tanks.

Will these futuristic signs become part of our near future?

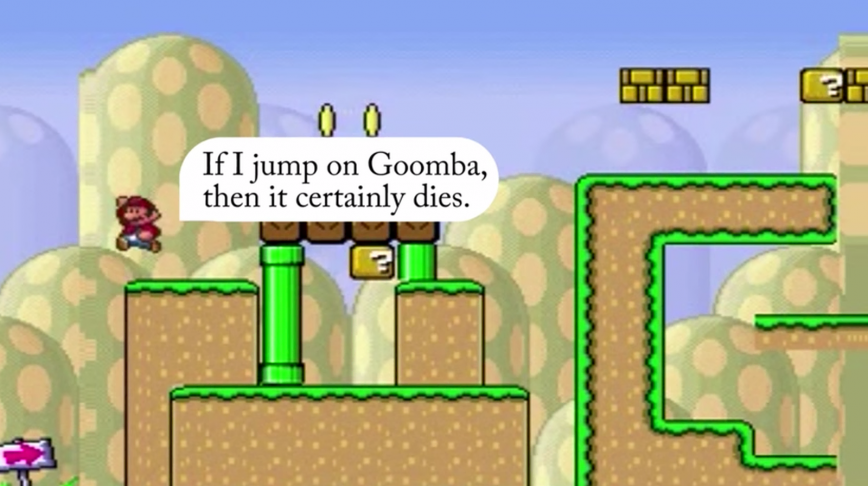

Super Mario is now able to learn and feel in the confines of his 8-bit universe.

There is a new artificial skin technology that could give humans magnetoception in the future.

American artist Mac Cauley created a new way to experience the painting in virtual reality.

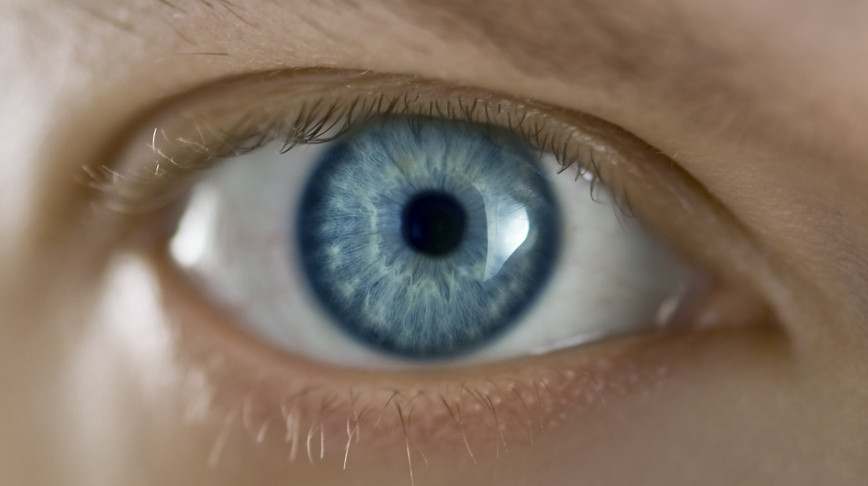

A new laser surgery can permanently turn eyes from brown to blue.

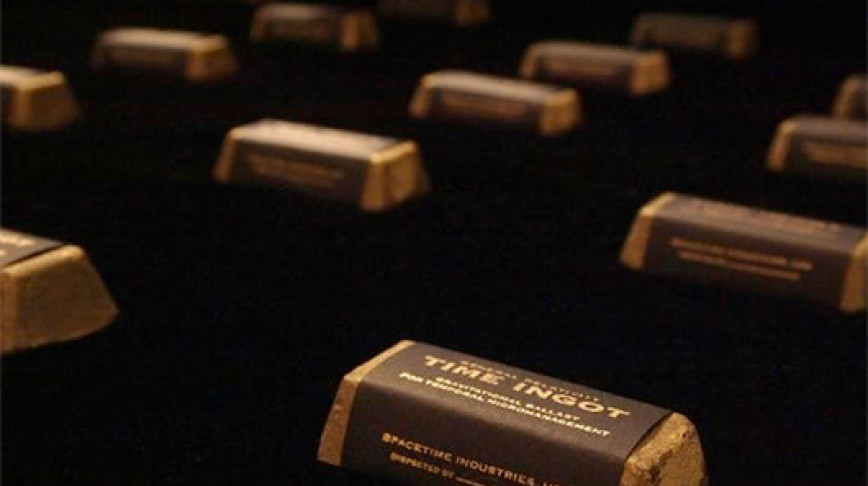

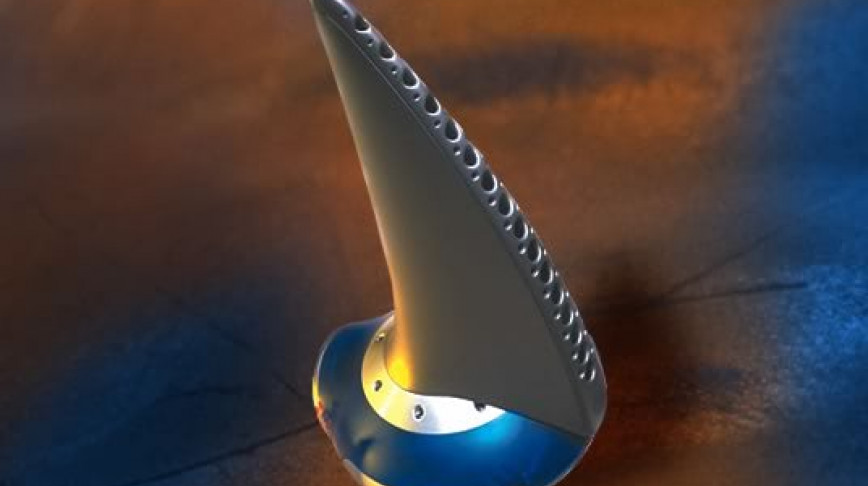

Time Ingot is a solid piece of lead alloy whose mass can slow down time in the immediate vicinity.

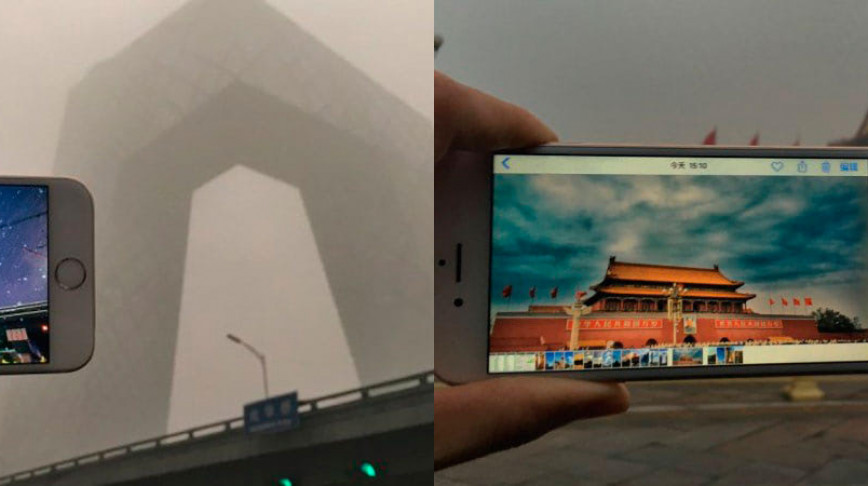

A few days ago, these images of iconic buildings in Beijing, as they look with and without intense smog, were posted on Weibo, one of China’s most popular social media …

While hipster-drink bacteria hunt space organisms, robots are catching whale snot in the open ocean.

Solarium, a video installation by NASA’s Solar Dynamics Observatory (SDO), puts visitors in the heart of the sun.

High tech is changing sports, but what are the consequences?

Pokémon Go is shaping social relationships amongst individuals.

The first uterus transplant in the United States raises the question: could uterus transplants allow men to get pregnant too?

A miniature piglet staring at you from a soup, to remind you of the origins of your meal.

The Brand Killer augmented reality headset boomerangs ad blocking into the physical realm.

A virtual reality experiment tries to help people frightened of death with an out-of-body experience.

Lyrebird is an AI model capable of synthesizing anyone’s voice from just a one-minute audio sample.

Discover the cellular world with stick-on camera lenses for your smartphone.

The way we interact with the technology in our lives is getting progressively more seamless. If typing terms or addresses into your phone wasn’t easy enough, now you can just tell …

"The simulacrum is never that which conceals the truth; it is the truth which conceals that there is none. The simulacrum is true." (Ecclesiastes) If we were able to take as the …

Fakeness has long been associated with inferiority. Fake Rolexes that break in two weeks, plastic Christmas trees, leaky silicone breasts that cause cancer, imitation caviar. Even …

One might think this video is just another witty flick made by some media artist. It is not. This is a real bird living in Southern Australia. In order to attract females, the …

An interviewer once asked Pablo Picasso why he painted such strange pictures instead of painting things the way they are. Picasso asked the man what he meant. The man then took out …

For the Venezuelan magazine Platanoverde, Gabriela Valdivieso and Lope Gutiarrez-Ruiz interviewed artist/scientist Koert van Mensvoort and discussed some of the ideas behind Next …

This is one of the weirdest things I ever saw... It's a commercial for a construction company. The text goes a little bit like this: Anabukikonten is "Anabuki Construction Co." …

Written by Debbie Mollenhagen. PART 1: FROM LINEAR TO CIRCULAR. Designer living has become designing life. I often ask myself: did it taste like the real thing? But when I open my …

A version of the hit reality TV show Big Brother is to be staged in the online virtual world Second Life. Fifteen international Second Life residents will occupy a glass house on …

Biosphere 2 is a manmade closed ecological system in Oracle, Arizona. Constructed between 1987 and 1989, it was used to test if and how people could live and work in a closed …

"Become a part of history." and "Your journey begins" are just two quotes from the Trace your ancestry with DNA website. That says enough about the business behind it, but what I …

Now with Google smart look. Don't worry, it is science fiction (still). Created by Sean Hamilton Alexander.

Take back the field. This past weekend, the OSU Linux Users Group descended on a field in Oregon to create a 45,000+ square foot crop circle of Firefox.

A group of manufacturers are selling canned oxygen. It comes in flavors and it's a bit like bottled water: a thing that you can get for free but might pay for anyway. But why …

Hypernose is a short movie made by students of the HKU/KMT. Click here to view it. Found at Lucky Coin Films

Incognito is a new skin cream line that lets you become unrecognizable to CCTV cameras on the streets and in public spaces like banks, city halls, ATMs, etc. …

Toasted bread as an information display device (originally developed in 2001 but somehow still intriguing): a toaster that parses meteorological information from the web and then …

The construction of this nuclear power plant near Kalkar (DE) started in 1973. After construction was finished, it was decided in 1991 not to use it, for political reasons. The …

Tringo is an online multiplayer game created by Nathan Keir (aka Kermitt Quirk) in December 2004 that runs inside the virtual reality platform Second Life. It is described as a …

A transformation of the big flashing arrows from the computer game Need for Speed Underground 2 (NFSU 2) to physical space. Although computer games generally try to imitate the …

PictoOrphanage opened its gates to all abandoned creatures we thought should be saved from falling into oblivion. These helpless, lonely waifs and strays could be found …

The machine and the experience of the machine are becoming one. I've seen these kinds of things in computer games, but now they are becoming real. It feels like plugging in to the …

The Sababa is the first real-time lava visualiser kit of global city flows. It takes the lava lamp one step further by reconstructing it according to the language of the current …

Leonard Knight started this massive desert art project in Niland, California, in 1985. For nearly 20 years he created the landscape with every shade and type of paint ... and just a …

Second Life is a privately owned, subscription-based virtual world created in 2003 by San Francisco-based Linden Lab. Founded by former RealNetworks CTO Philip Rosedale, Second …

The world's oldest profession has made quick inroads into virtual life. You can make a quick buck if you're willing to accept in-game money for sexual services, whether that's …

Is it possible to let a first sketch become an object, to design directly onto space? This is a question that Front Design have just asked in their project Sketch Furniture. "The …

Fascinating old nature. Somehow I find it hard to believe these weather photos are not computer rendered. Must be because of my Hollywood-special-effect-conditioned mind.

The pattern on the Animal Sweater suggests a new way to experience commercial imagery. The Animal sweater, designed by Karl Grandin, was first shown at The Biggest Visual Power …

How do you design an experience of retreating into nature in the middle of Holland's second-biggest city? Design a park built entirely from used railway sleepers. That was the basic …

The largest indoor beach in Europe, near Berlin. www.my-tropical-islands.com

Scientists are looking for the most beautiful woman in Germany... At the 2002 Miss Germany pageant, the eight finalists were photographed from the same angle without makeup. The …

This concept is a cutting board that has an integrated scale within a defined area on its surface. This allows a person to both cut and measure ingredients on the same surface …

Enologix makes software that predicts how a wine will rate in reviews even before it is made. It claims that wine quality can be measured chemically, and a score assessed, much …

The toy export map of the world. The toy import map of the world. The way we map things can make a world of difference. Worldmapper's maps transform traditional cartographic …

What happens to your digital presence when you die? Do your website, MySpace profile and Second Life avatar live on? Nope, they go to mydeathspace.com. Funnily enough, the Amsterdam-based …

The AR+RFID Lab at KABK (Royal Academy of Art, The Netherlands) sends this marker card, wishing an Augmented Christmas. Funny they need a real tree for hanging those markers... …

The Augmented Cognition System will help the brain adapt to data on computer-screens. If there's too much/little data, it will decrease/increase the information for you. If the …

This is not a mountain, this is a building. Expedition Everest is a "themed roller coaster" attraction created by the imagineers of Disney's Animal Kingdom in Florida. …

Electromagnetic radiation from an active mobile phone sets off the LEDs in its proximity. A light shadow follows the conversation through the space. …

Cory Arcangel hacked and modified a piece of hardware (the cartridge on which the game "Super Mario" is stored, or rather, was stored) to produce "Super Mario Clouds". This is …

NeuroSky (founded in 2004), an innovator in "wearable" bio-sensor/signal processing systems, and SEGA TOYS are developing mind-controlled computer games and next generation consumer …

This must be the best worst software concept I've seen this year: an email mailbox represented as a 3D, virtual LAX airport. In this world's coolest email program, based on the …

At the beginning of the digital era, several metaphors from the physical world were transferred to the digital environment in order to make, otherwise incomprehensible, technology …

It's fascinating how stock traders can get so excited about a few abstract numbers on an electronic display. These numbers represent real money, and every small fluctuation can …

No no, don't worry! These hypernatural animals aren't the genetic surprise of some mad scientist, it is artist Sarina Brewer resampling, remixing and stitching together her Custom …

Just in case you hadn't seen this one. Informational spaces define physical spaces, rather than the other way around. The map is the territory? Let's just hope the territory can …

This copy of Rodin's "Le Penseur" / "The Thinker" (1880) shouldn't have that much on its mind... Using laser technology, Korean researchers have crafted the microscopic version …

It is true, they are rare, but they do exist: girls with tiny waists and large breasts. Occasionally, women are simply born like this. The only problem they have - in a world …

Did you have your Out of Body Experience today? We knew already that our nature is merely how we perceive it, but now scientists from the Ecole Polytechnique Federale in Lausanne …

The Ironic Sans blog brings you Pre-Pixelated clothing! Stop worrying about whether or not the producer of that Reality TV show you're on will pixelate your carefully chosen …

Phone, internet, television, photography, even books or music: today it is hard for the mind to escape from virtual worlds. A day without communication or interaction could make …

Anyone can explain Baudrillard with this movie. After the recent passing of this philosopher, it seems appropriate to post this movie as an ultimate "Simulacra for Dummies". …

After Magritte: Surrealism in the 21st century. Peculiar image of the week, created by Isabel Lucena.

The Objectuals is a series of work by artist Hyung Koo Lee. It features an interesting mix of visual distortion apparatus for the human body. Lee says that he experienced …

For all you self-Googlers out there. And yes... that means you too! Back in the old days (pre-next-nature) you used to wear gold rings and lots of bling to show off your wealth …

Victoria has great realism, perfect imperfections, and incredible ease of use. Based on live human models she is trying to close the gap between real life and models typically …

Atkin's Architecture Group recently won the first prize award for an international design competition with this hyperreal entry. Set in a spectacular water filled quarry in …

Yes, I understand the media interest in Second Hype (Of course we don't take it seriously as a virtual reality concept. Steering a mouse, sitting behind a flat screen, moving 3D …

Unfortunately this stylish refugee is merely an advertising fata morgana.

Tech company Fujitsu released a new way to look around you while driving a car. Where drivers used to turn their necks and check mirrors, this tech has cameras around the vehicle to …

Procter & Gamble, the maker of Pringles, has successfully argued in a British court that their product is ‘not a potato chip’. Pringles are now officially not potato …

Face-swapping software finds faces in a photograph and replaces the features of the target face with ones from a library of faces. This can be used to "de-identify" faces that appear in …

Ask a child to draw a mountain and you will get something like the Matterhorn. The shape of this famous mountain, situated on the Swiss-Italian border in the Alps, is one of the …

Some days when we look in the mirror we like what we see, some days less so. Would it not be great to be able to tweak the image a bit? After all, it is our main manifestation to …

The White House, seat and symbol of the US government, is built in a Roman style, which was in turn inspired by Greek architecture. At left, we see it applied in the Villa …

A local Chinese newspaper, The Beijing Times, revealed some of the staggering fireworks at the opening of the Beijing Olympics were actually not fireworks, but computer graphics. …

Last week, the BBC reported a colony of penguins that had gained the ability to fly (video). The penguins were reported to fly thousands of miles and spend the winter in the …

Peculiar image of the week. Source: Worth 1000, via Trendbeheer. See also: Mona Lisa goes LA, The Photoshop Beauties.

Visiting the American Museum of Natural History in New York, I pictured myself, let's say, 150 years from now, trying to explain to my younger family members what nature looked …

The struggle between the born and the made is being fought out in a wardrobe on Saturn. By: 1stavemachine.com | Related: Sixties Last

(Extracted directly from Pink Tentacle , thanks!): Researchers from Japan’s ATR Computational Neuroscience Laboratories have developed new brain analysis technology that can …

"Google has stationed approximately a million computers worldwide to be able to process the 40 billion searches per month. One search request uses as much energy as a low-energy …

Second Skin takes an intimate look at computer gamers whose lives have been transformed by the emerging genre of Massively Multiplayer Online games (MMOs). World of Warcraft, …

WARNING: Spending too much time behind the computer may cause elements from the digital environment to leak into everyday life. Self control freak Olivier Otten is …

But they love dinosaur! Judge for yourself whether this dinosaur nugget product is supposed to give kids a history lesson on the evolutionary relation between dinosaurs and birds …

Are we on the verge of a future human evolution, one that isn’t, at least in its very core, “the survival of the fittest”, but rather “the evolution of the richer”? Think about …

Usually we don't realize the hours of photoshopping spent on magazine and advertising images before publication. The raw photographic material reveals a lot about the fine craft …

The Telepresence Frame is a domestic object which utilises the fact that one's bodily functions are digitised in order to create a new form of telepresence. Allowing loved ones to …

Videogame players celebrated this week as a hotly anticipated sequel to the popular online video game World of Warcraft hit the shelves. The nine million gamers can now play a …

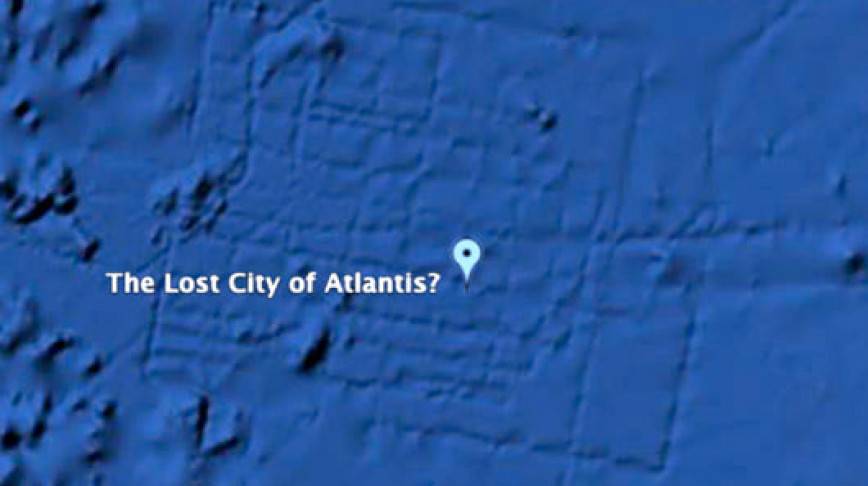

Since the days of Plato, the lost city of Atlantis has captivated the imagination of many. The city, if you don’t already know, was said to be a naval power located roughly 600 …

This video shows the first beta version of TwittARound – an augmented reality Twitter viewer on the iPhone 3Gs. It shows live tweets around your location on the horizon. Because …

Jim Reinders, an experimental artist with a history of using curious media, became so enthralled by the beauty of the famous Stonehenge in England that he had to recreate it. …

Remember the days when the flavor of a fruity drink was simply connected to an apple, orange, strawberry, kiwi, or perhaps – if you felt really exotic – an acai berry? Nowadays we …

A future in which prosthetic patches prevent bodies from aging? Or a sexist view on femininity in robotics? Either way, the question is whether they are up- or downgrades of …

Our beloved King of Pop, Michael Jackson, who died tragically at the age of fifty after suffering cardiac arrest, was one of the most widely beloved entertainers and influential …

There is something about the work of Josh Keyes. He might be the Bob Ross of our generation. He has a site.

Never believed in pots of gold anyway. Related: Credit on Color - rainbow of credit cards | Datafountain - money translated into water | Ceci n'est pas une Roche | Faked Fireworks …

Internet porn graphics transferred to real life objects. A nice photography project by Jean Yves Lemoigne.

Today browsing and gaming is dominated by the shortcomings of machines, for machines simply do not know who is on the other end. Man needs to interact; therefore man needs to …

In next nature, video game characters become part of your everyday environment; however, I am not sure I would hire this guy to do my plumbing. This hyperreal Mario was created …

Lenka Clayton: A series of five digitally repaired images taken in Lebanon of buildings damaged by the 2006 conflict with Israel. The images were taken specifically for this …

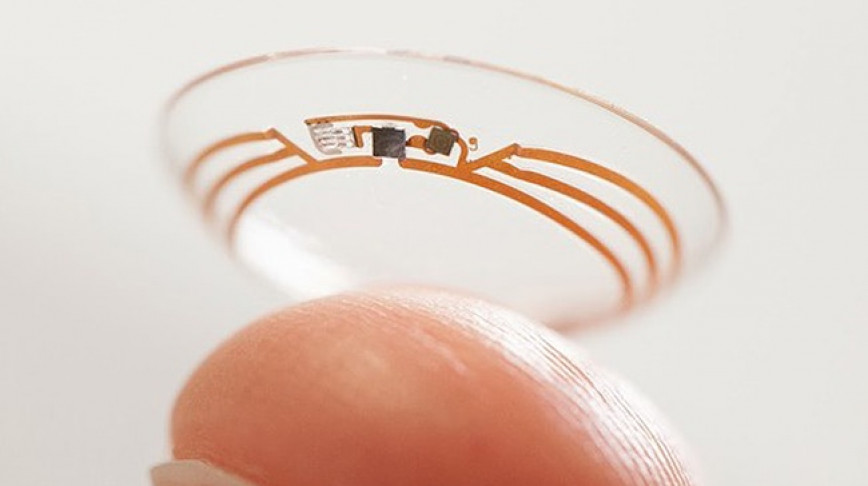

Getting information as fast as possible and on the spot is the trend. So what could be more direct than having information fired directly into the eye? Today - together with his …

How does Second Life end up as a virtual chicken farm? Watch Patrick Davison explain the rise of Next Nature on the social platform. Image by Kaie Magic | Related: Confetti …

In the classic Milgram Experiment conducted in the 1960s, volunteers were told by an authority figure to deliver electric shocks to another person as punishment for incorrect …

Found sportswear ad. Will be reality some day... (with magical goggles of some sort) Related: Recreation in NextNature | Big Ass Search Engine | If Giraffes lived in the US | …

Sind wir noch zu retten? ("Can we still be saved?") That was the slogan of this year’s Ars Electronica festival in Linz (Austria). Titled ‘REPAIR’, the media art festival urged us to leave our scepticism and …

Man is a flexible species. We tend to adapt quite rapidly to new environments. But how fast can these adaptations turn to new evolutionary traits? For instance: to what extent is …

With an optical trick, this German bottle of water is trying to prove its effectiveness for the body. Though drinking water is a necessity for life, the downside of this product …

Following in the footsteps of a Marco Polo-esque spice trade, next nature explorers Jon Cohrs and Ryan Van Luit travel by canoe past massive cargo ships and factories in search of …

At ISEA 2010, the International Symposium on Electronic Arts, media artists and media researchers from all over the world present their work in Dortmund (Germany). This year, …

During the selection of the top ten next nature movies, we hesitated quite a bit between The Truman Show (1998) and American Beauty (1999). The Truman Show tells the story of a …

Urban intervention, naughty boy-style! The public media interventionists of VR/Urban have designed a cool tool to intervene into next nature: the SMSlingshot. A wooden, embedded …

Avatars are commonly known as virtual characters in the digital realm representing a user. But what if avatars could house personal history, profile and ideas? Could that enable …

During the 1960s, monkeys were sent into outer space as part of the US space exploration program. But they didn’t all return, until now. Via scaryideas.com | Related: WWF

Some beautiful "visual thoughts" by NL Architects. On the left: Minimum Speed 200 km/h. On the right: United Airlines. On the left: desert irrigation made beautiful with this …

This video shows the design vision of Corning, a company that specializes in glass. Not just any glass, but glass incorporating technology, electronics and displays. And it sure …

In this PechaKucha presentation, Mike Dickison comes to a very funny conclusion: Although Big Bird might superficially resemble other ratites like the ostrich or emu, he is …

Some beautiful images from games gone wrong. If you have more of them, post a link in the comments. Via

Arne Hendricks thinks we can solve the ecological crisis by shrinking every human on earth to 50cm tall: http://bit.ly/sxxNr1 #powershow

This interview from 2008 is exemplary for a time when people started experimenting on humanizing anonymous avatars in the virtual realm. Shopping, building, going on holiday, …

The Animal Architecture Awards have just announced the winners of their 2011 contest. Taking first place is Simone Ferracina's Theriomorphous Cyborg, a (speculative) augmented …

This project explores the invisible terrain of WiFi networks in urban spaces by light painting signal strength in long-exposure photographs. A four-metre long measuring rod with …

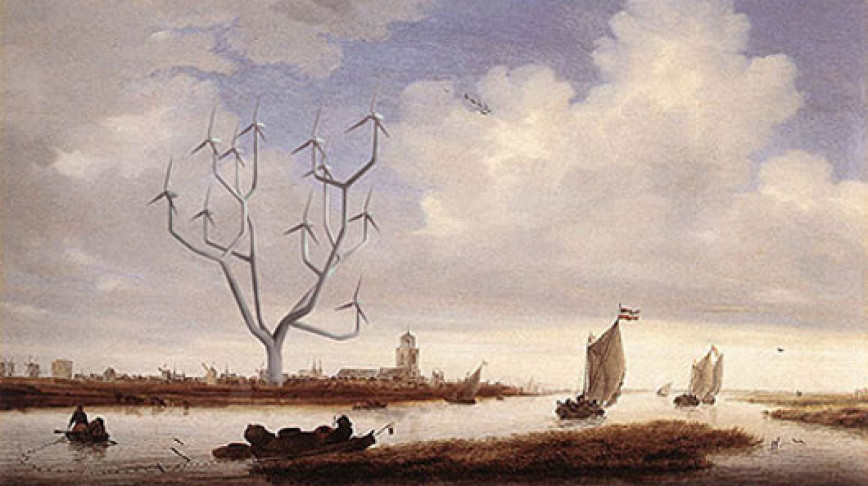

A new Dutch landscape with windmills up to 120 meters. Designed by NL Architects.

Text for blind people using the Braille alphabet has been around for some time. But instead of making separate books for the visually impaired, why not change the way we all read? …

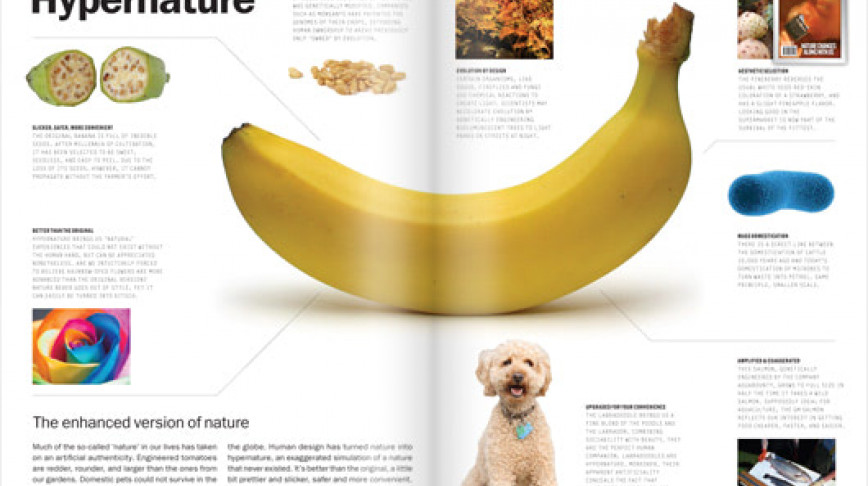

During the coming weeks, we will present a selection of our favourite pages from the Next Nature book. To kick the series off, we’ll start with a spread about hypernature; the …

Designed by Luc de Smet, Awear is a speculative bracelet that can detect and record the sources of allergies for children in uncontrolled environments, such as schools and …

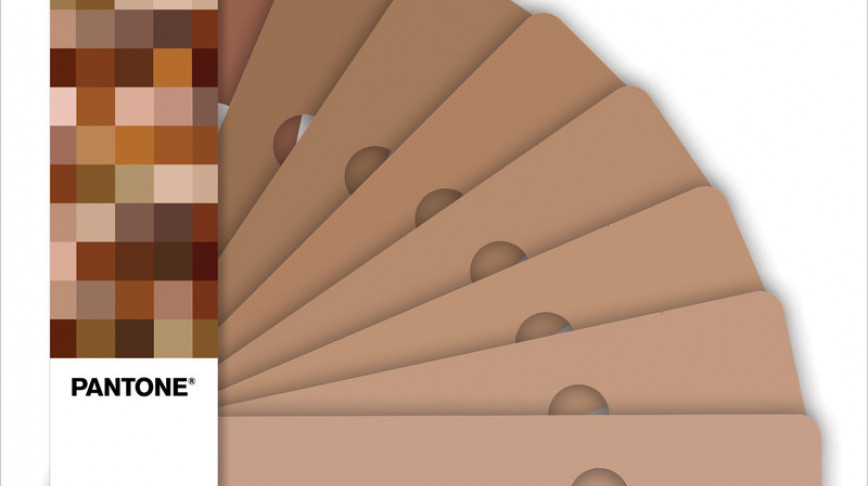

Should made-to-order babies become a reality in the near future, one piece of the design puzzle has now been solved: Pantone has released its SkinTone system. Indexing 110 skin …

A while ago we wrote about the Pixelhead mask by artist Martin Backes. Now he informs us that the masks are for sale. Get one while they last!

Always good to see a swimming pool exactly where you need it. At the San Alfonso del Mar resort in Chile they know how to cater to people who love nature – except for the rocks, bites …

So you thought your life was already pretty much media-saturated? Indulge in the design fiction film Sight and you'll realize you ain't seen nothing yet.

Do you ever miss being able to smell the woods in an online travel journal? The odor of a new leather jacket in an online shop? Or perhaps you just couldn’t find the words in an …

Augmented Reality is supposed to be just over the "Peak of Inflated Expectations" of Gartner's hype cycle. This video, however, is vaulting the technology directly onto the …

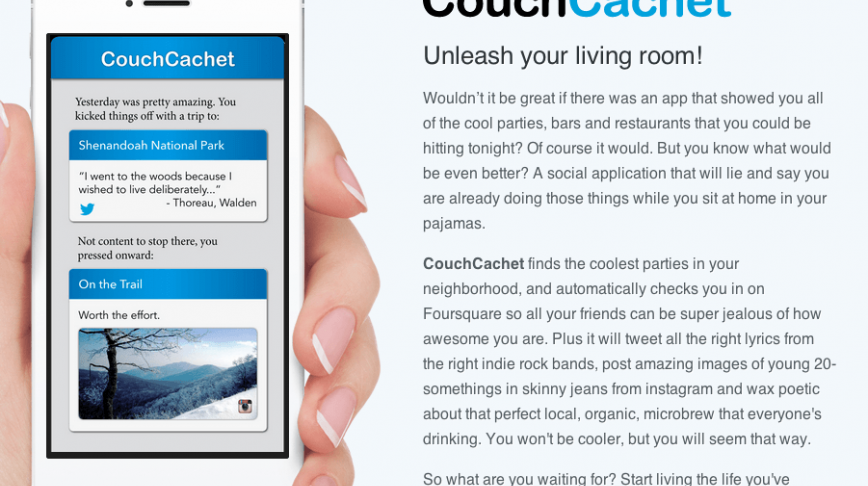

CouchCachet promises to give you the fully-booked, in-the-know life while you are on the sofa.

Floris Kayak discusses online hoaxes and the future of technology.

The News Machine is a contraption that explains the news distortion that happens when a message is broadcast through different media. The starting point is a tweet sent from a …

An astounding tangle of multi-colored water flowing throughout 18 arteries represents what happens every day in the pulsating heart of Tokyo. This is how Takatsugu Kuriyama, from …

Because date rape drugs are odorless, colorless and tasteless, victims don't normally realize they've been attacked until it's too late. In a clever, necessary bit of information …

An artist visualizes WiFi waves across landmarks in Washington, DC.

All humans on Earth put together are not enough to fill the Grand Canyon. We are so small yet so present.

Besides finding their way, people look at to transport them to another place, or in this case, another perspective on planet Earth.

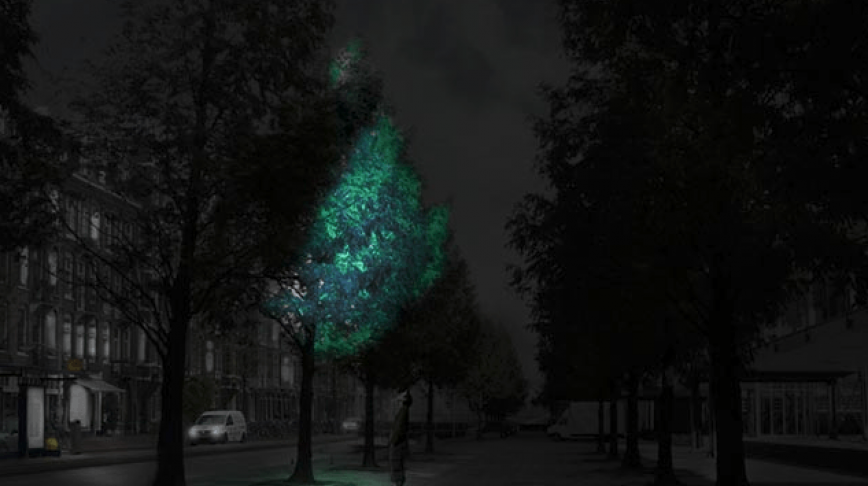

Daan Roosegaarde is exploring possibilities to replace streetlights with luminous trees.

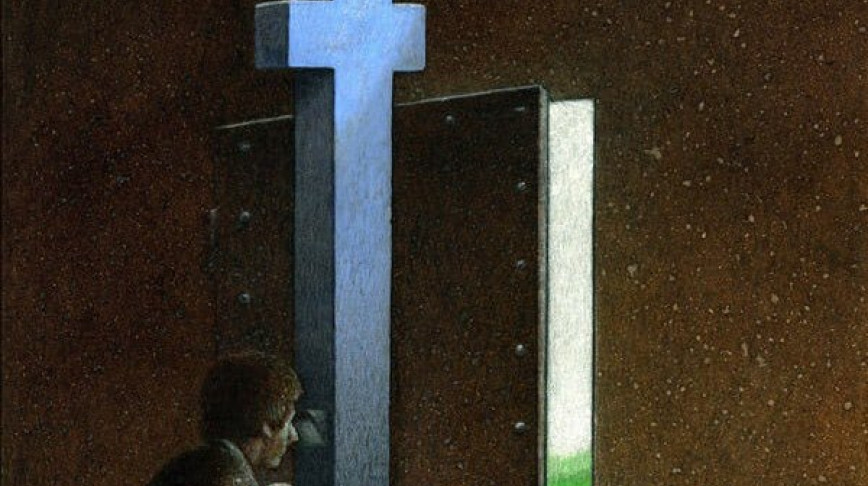

Pawel Kuczynski portrays the contradictions of today’s world.

Nike sneakers with lunar surface pattern, to celebrate the 45th anniversary of Neil Armstrong walking on the moon.

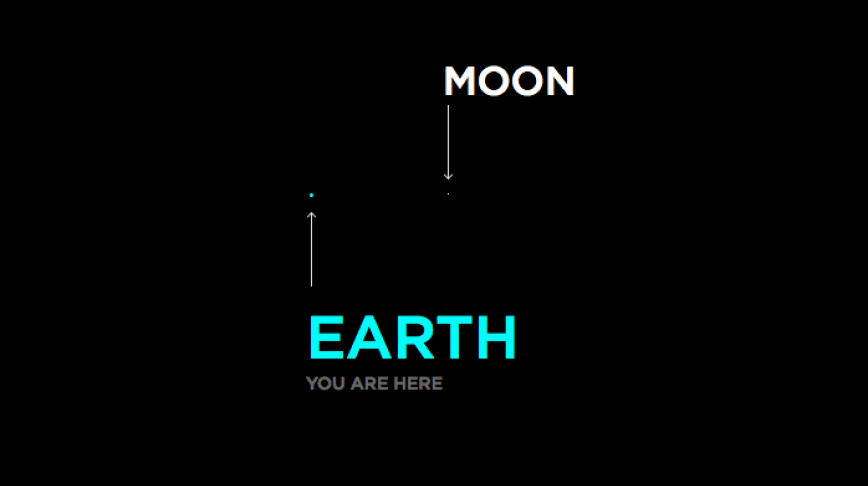

If the moon were only one pixel: a scale model of the solar system that shows how big it actually is.

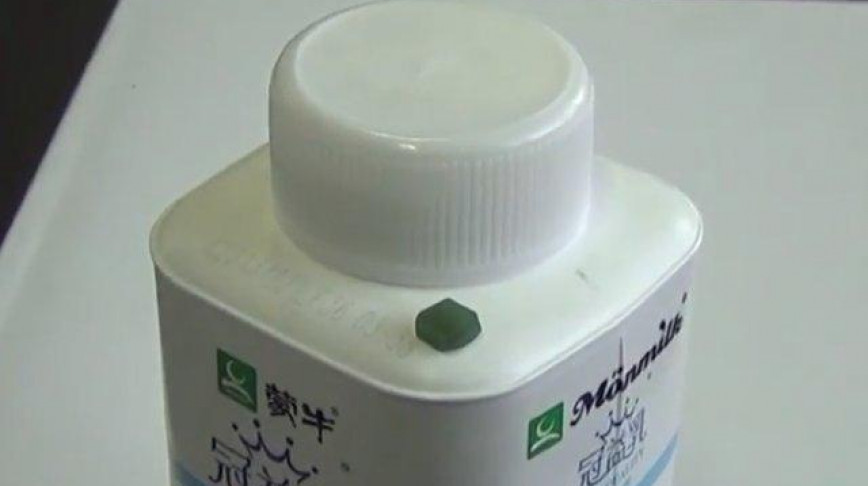

Smart Tags stick to containers of food and change color when something has expired.

Swimming salmon fillets confuse, or perhaps educate, children about where dinner comes from.

Eyes of the Animal is an interactive project that invites the public into an unusual virtual reality setting, conceived especially for experiences in actual forests, giving visitors the opportunity to see the world as an insect would.

The popular car brand is working on augmented reality goggles that enhance the driving experience.

The Smithsonian’s National Air and Space Museum has taken sun observation to the next level with a giant public display of images and data that show the sun in hyper-real detail.

San Bruno in California is the only city with a heart-shaped neighborhood.

The Weather Machine: an installation that explores rain, clouds and the sun's heat to recreate the weather occurring outside.

Air New Zealand is equipping its flight attendants with AR headsets to explore in-flight optimization, giving its passengers a glimpse of what future air travel service might look like.