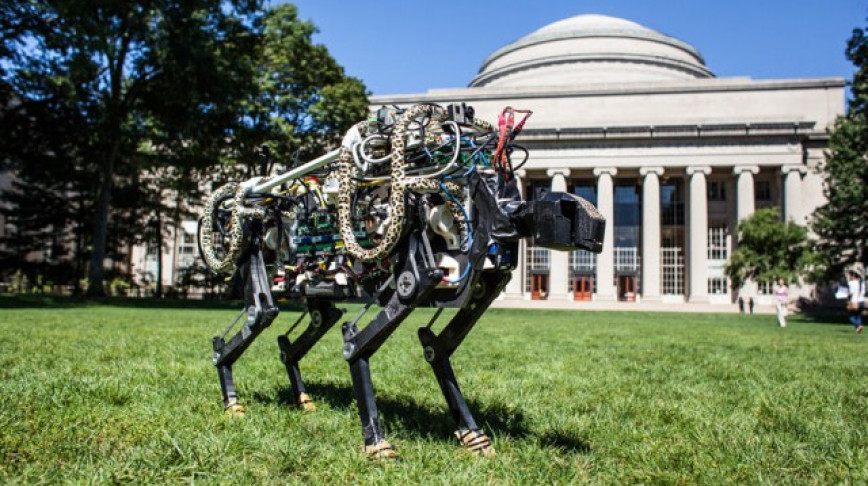

MIT Lets Robot Cheetah Off Leash

Hessel Hoogerhuis

Robot Cheetah has grown up! Scientists at MIT's Biomimetic Robotics Lab have now trained their robo-feline Cheetah to detect obstacles and jump over hurdles as it runs, making it …

We met Anouk Wipprecht and talked about smart fabrics and accessories that can listen to our body, therapeutic fashion and the future of dressmaking.

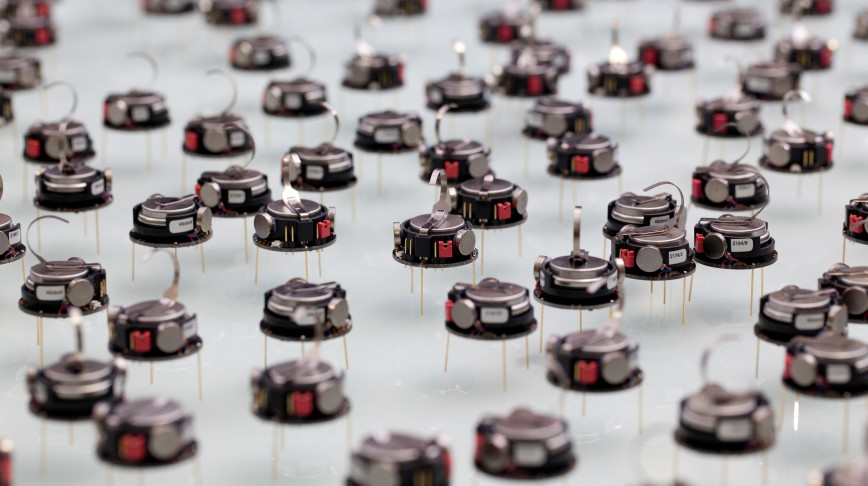

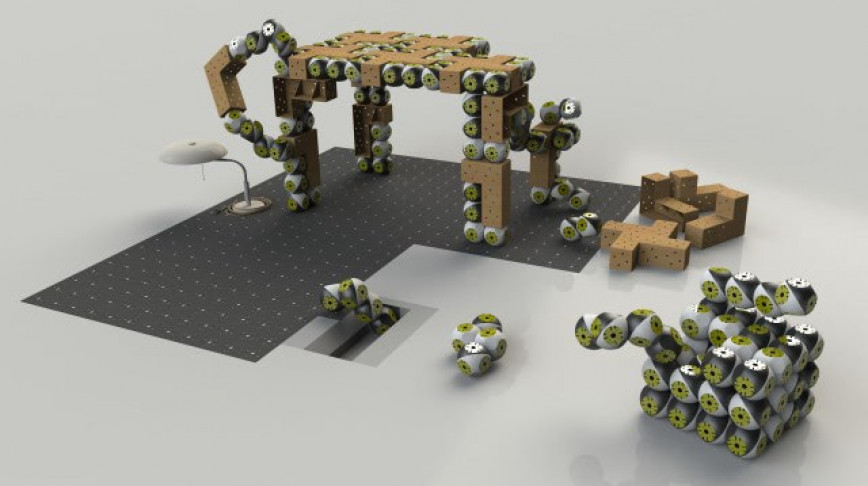

From flocks of birds to fish schools in the sea, or towering termite mounds, many social groups in nature exist together to survive and thrive. This cooperative behaviour can be …

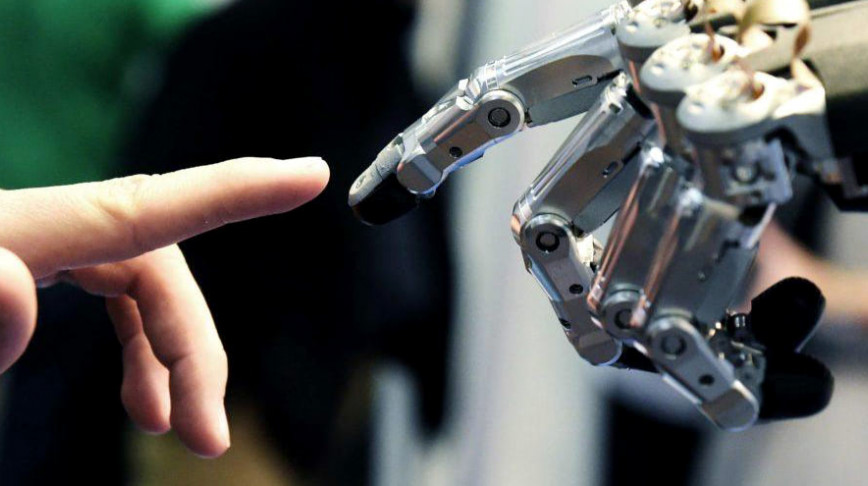

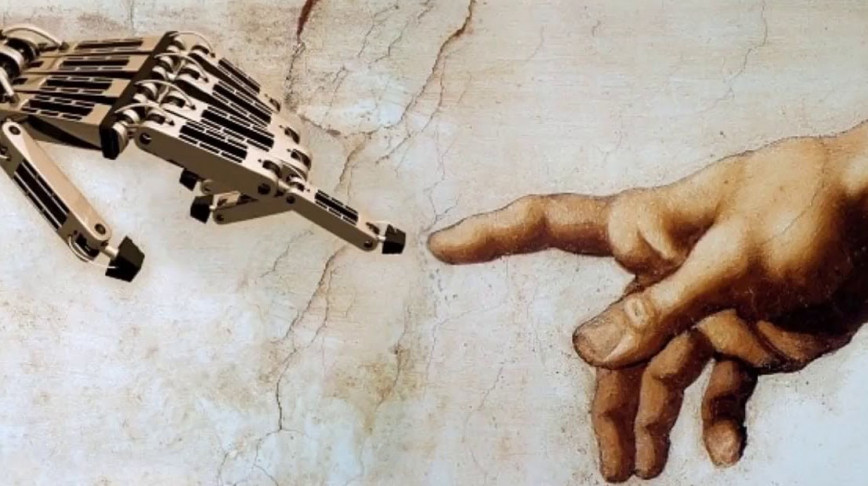

A horse can run faster than a human, yet nobody claims horses will make mankind dispensable. When a man rides a horse and the two work together, something new happens. Then, what …

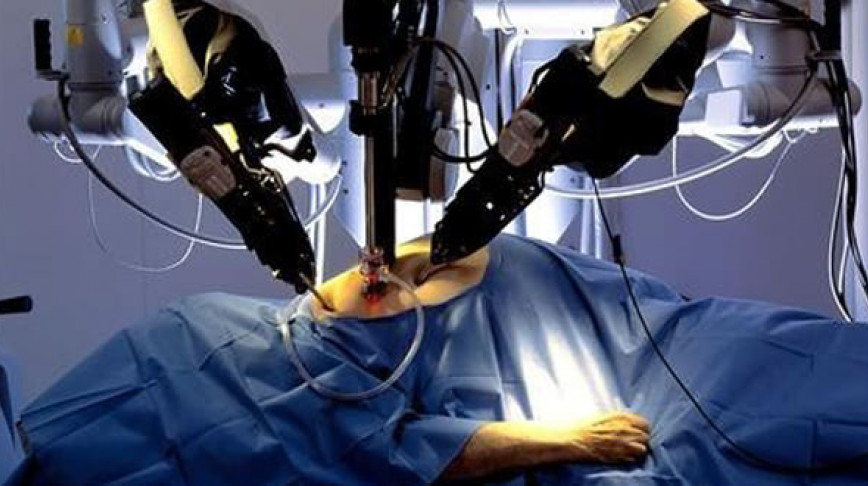

Conventional wisdom says that you can’t replace the human touch in terms of medical care, but in our rapidly changing technological environment, it appears that this perception …

At first glance, the premise of the film 'The Iron Giant' (1999) seems to be a total failure. An animation film set in the United States in 1957; while the Russian satellite …

What if your co-worker was a robot? Dutch startup Smart Robotics is a job agency for robots that allows you to hire a smart-robot.

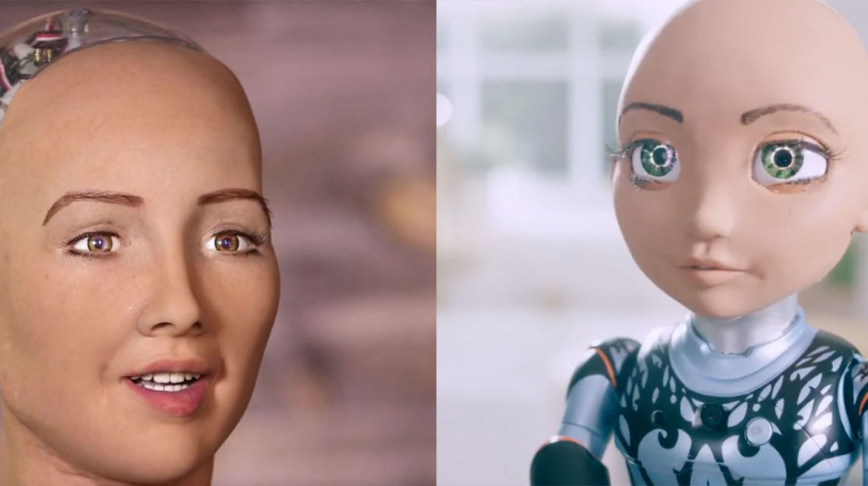

Robot Sophia is pretty much the international face of the ‘modern robot’. Sophia is the Audrey Hepburn-inspired humanoid robot who stands out for multiple reasons. She is the …

Sophia the Humanoid, a human-like robot in appearance and mannerisms, was granted citizenship by Saudi Arabia and became the first robot with citizenship.

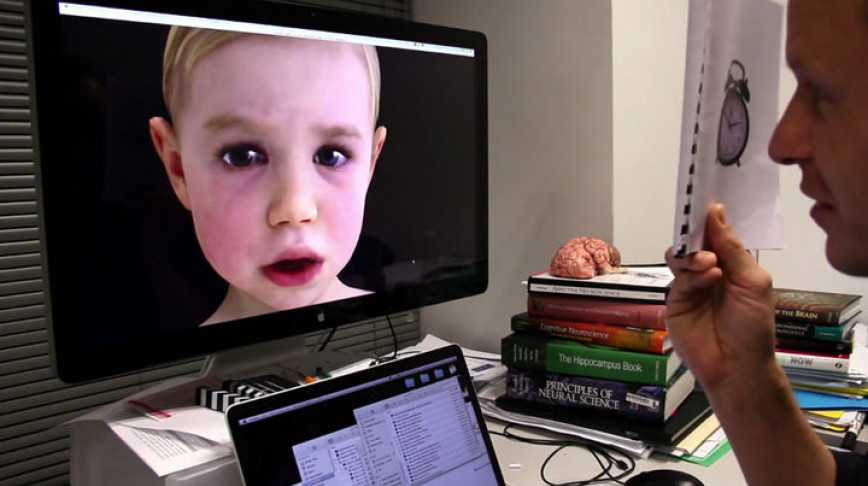

The goals of "understanding humanity" and "humanizing robots" are tightly related to each other. Infanoid Project is trying to relate robotics to human sciences in order to …

To tread the uncanny valley is a conscious choice for many artists and enthusiasts, as a means to evoke, through their work, powerful emotions, thoughts and everything in between.

The Octobot is the first of its kind made fully of soft materials and aims to pave the way towards safer interaction with humans.

Robots in health care could lead to a doctorless hospital.

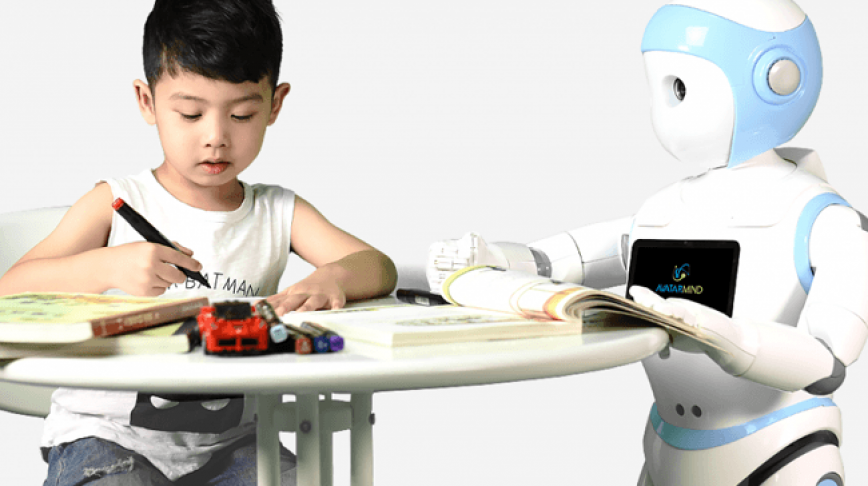

This robot nanny is designed to take on adult responsibilities, raising concerns regarding the consequences of using robots to raise our children.

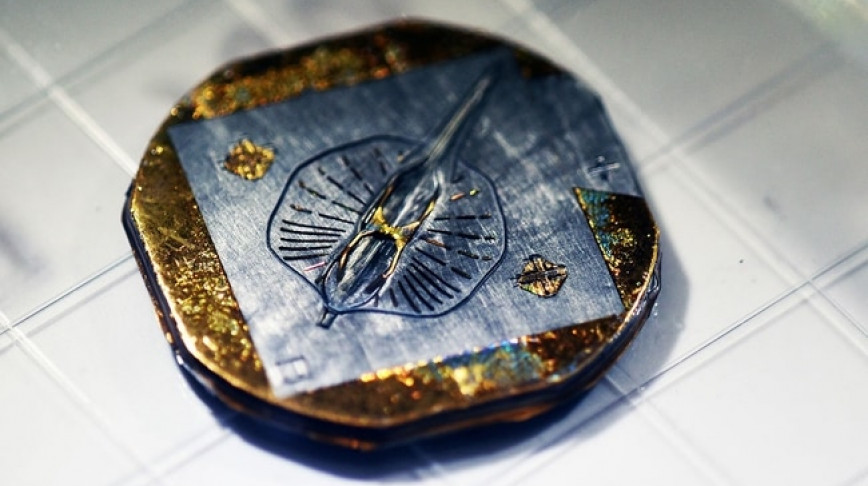

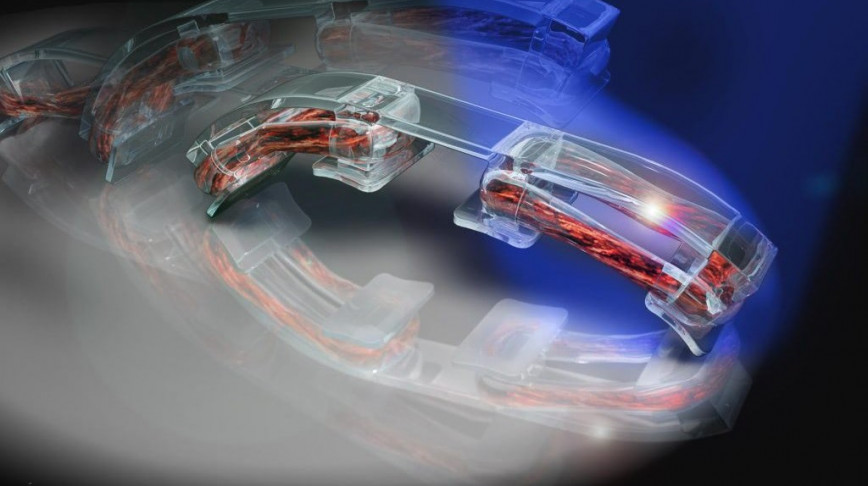

By way of reverse engineering and taking heart cells from a rat, researchers at Harvard University have designed a miniature robotic stingray that is alive.

The robots have arrived! Yesterday we celebrated the launch of HUBOT, job agency for people and robots at MediaMarkt, as part of the Dutch Design Week in Eindhoven. Our virtual …

Imagine being 16 and studying for a job that soon won't exist. What kind of future is that? New occupations will come thanks to robotization.

Occupying the 52nd floor of Tokyo’s Mori Tower, Mori Art Museum is internationally renowned for its visionary approach and highly original curation of contemporary art. The …

Scientists in Japan have developed a koi robot that swishes around in water just like the real, fishy thing, and does stuff real koi can't: swim in reverse and rotate in place. …

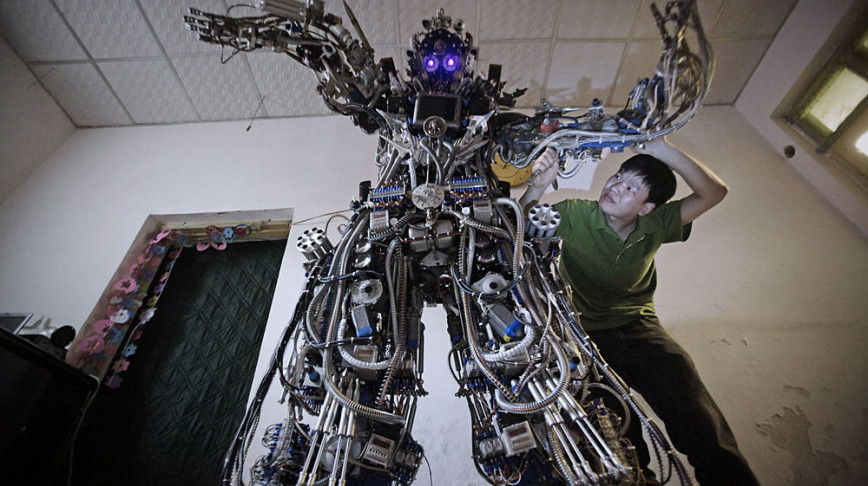

Comedian Paul Merton went to China for Five.tv (early 2007) and near Beijing he read the following headline: This farmer grows robots...

While time seems to flow in this one direction, some people just know how to walk straight through that fourth dimension; catch it in a videoclip and throw the message into …

Exmovere Holdings, a biomedical engineering company, is focused on government and consumer applications for healthcare, security and mobility. This company developed Exmovere …

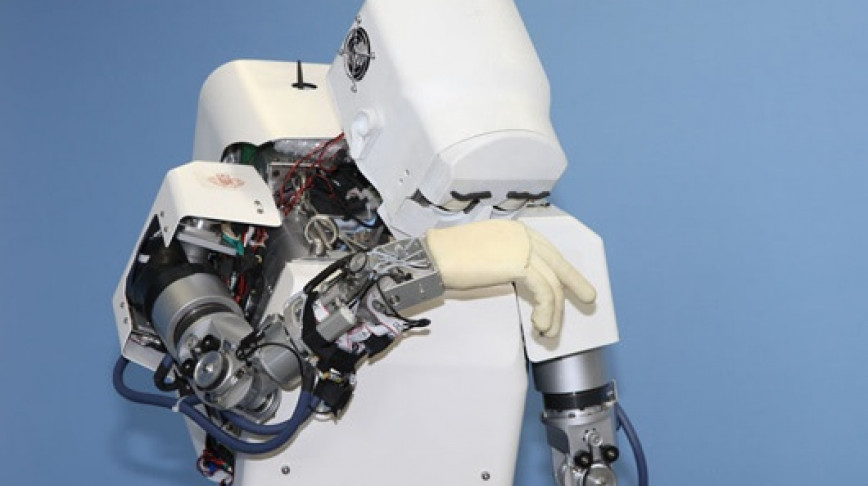

Meet KOBIAN, the humanoid robot that is not only able to walk about and interact with humans, but uses its entire body in addition to its facial expressions to display a full …

Waiter, there's a robot in my soup! Weighing only 60 milligrams, with a wingspan of three centimeters, robo-fly's tiny movements are modeled on those of a real fly. While much …

For past entries and an introduction to the 11 Golden Rules of Anthropomorphism and Design, click here. When a product imitates animal behavior, the strict social rules …

Electronic whiskers put sensitive catlike sensors on robots.

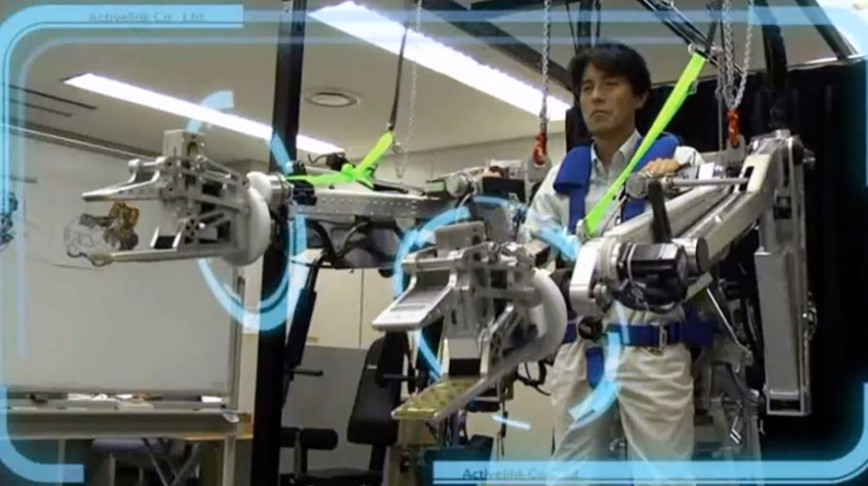

Power Loader: the power amplification exoskeleton robot.

The world’s largest indoor farm is not the only firm developing vertical agriculture. Japanese company Spread plans to grow more than ten million heads of lettuce a year by …

The Wearable Tomato Project consists of a robot that dispenses tomatoes.

Researchers at Carnegie Mellon University are developing edible batteries to power ingestible medical devices for diagnosing and treating disease.

Wanted: New jobs for humans and robots! Which designers, technicians, robot enthusiasts will help us with innovative, feasible ideas for the new jobs of the future?

An Australian study has shown the use of lifelike robot babies increased teen pregnancy, rather than discouraging it.

In the port of Rotterdam you might come across a floating Waste Shark: a robot able to collect up to 500 kilos of trash.

A Bricklaying Robot builds low-cost houses in just two days.

What happens to robots that are no longer needed? In the future robots will be biodegradable.

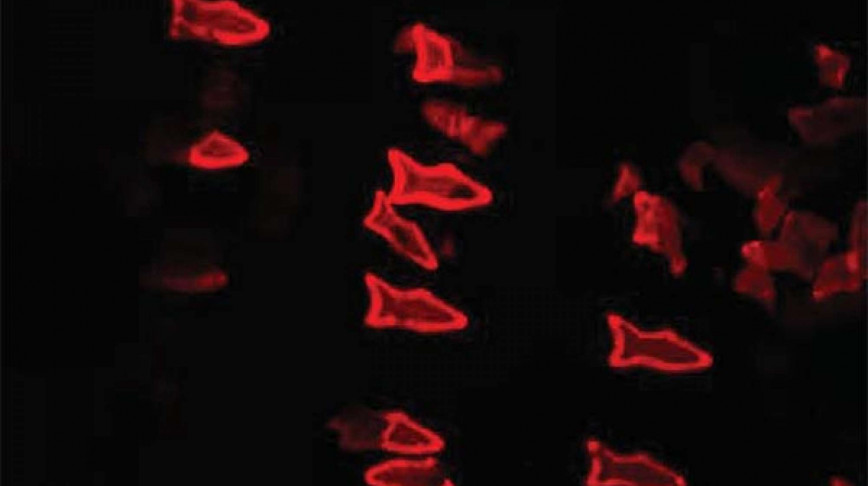

Where most researchers are focusing on keeping bees alive, researchers at Harvard are developing a bee replacement: the Robobee. The Robobee is only half the size of a paperclip, with ultra-thin wings flapping 120 times per second. The main goal is to build a mechanical pollinator.

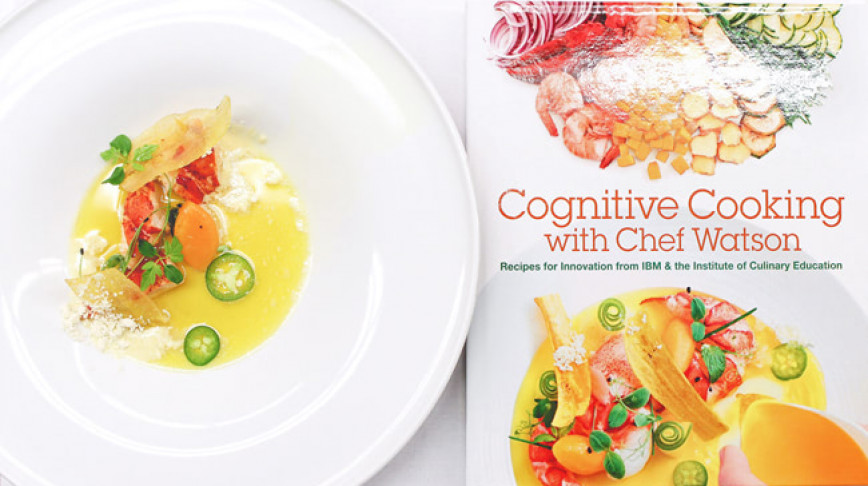

The AI chef knows more than 10,000 recipes from all over the world and is capable of combining any ingredient while following your personal food preferences.

A biomedical solutions company is developing a system for insects to wear, allowing engineers to steer them remotely.

BladeRanger might have found the ultimate solution for cleaning solar panels by putting drones and robots to work together.

We fear being replaced by robots. They have the potential to be smarter, stronger and more hardworking than us, but so do horses.

In 2017, a hoverboard can fly 164 feet above the earth and is able to hover over the Atlantic Ocean.

The home healthcare professional is one of the 16 positions from HUBOT, the job agency for people and robots.

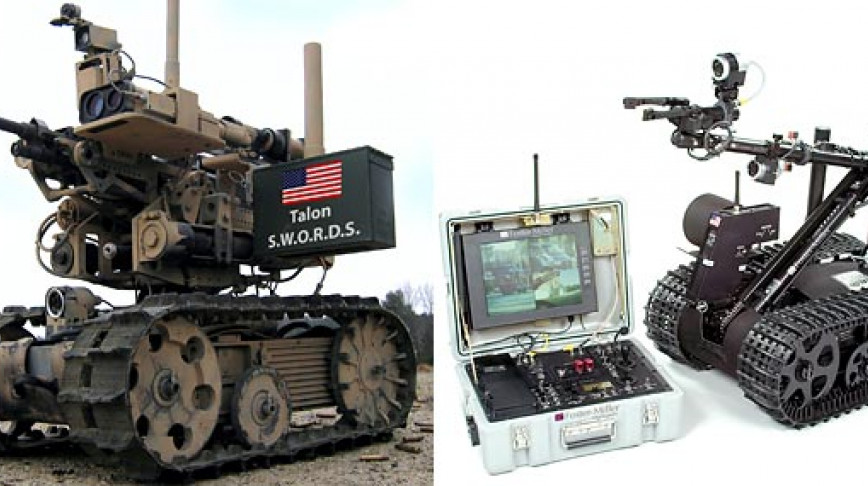

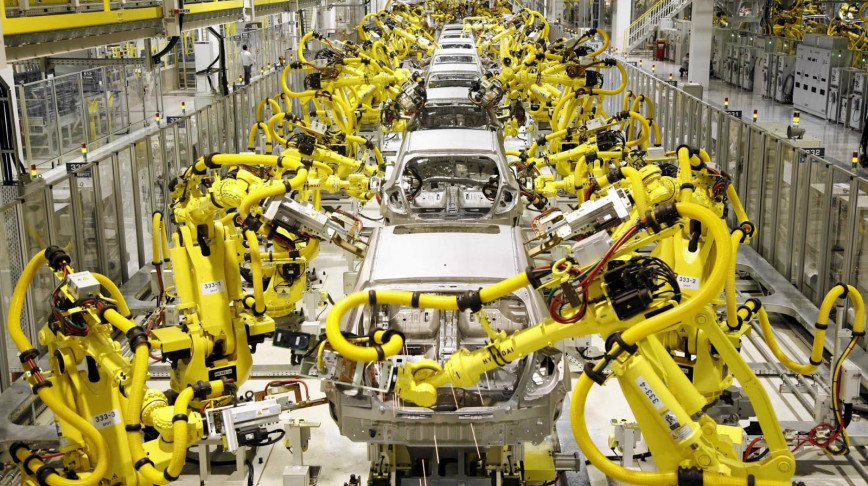

The robots are coming! They’re getting smarter, cheaper and more reliable. How long will I have my job before a robot steals it? The industrial revolution made muscular power …

We talked with Bas Haring to discuss the conception, image and will of the robot. Read our conversation with him and update your ideas about robots.

"Misbehaving (Ro)bots" asks whether technology could hope to replicate these small bothersome quirks that instill a feeling of intimacy.

Craftsmanship is generally highly appreciated, but it takes a lot of time to master a craft. Therefore, as a robot coach you train - you guessed it, a robot - to transfer your …

A video of a robotic bee pollinating a flower (looking more like Loopin' Louie spinning off the board game and hitting a flower) recently caught our attention. What at first sight …

The first robotic officer will soon report for duty in Dubai.

Robots are getting stronger and smarter every day. They are taking over our whole lives. How long will I have my job before a robot steals it?

Over time, our bodies, our food and our environment have become more and more subject to design. As designers, we hold the responsibility and have the unique chance to envision …

We must be mindful about how we engage with technology: what we use it for, why, and whether it helps or hinders us. Sometimes our tech seems to be flowing in inhumane directions, …

Rule #1: A robot may not injure a human being or, through inaction, allow a human being to come to harm. Remember Isaac Asimov's classic three laws of robotics? Surely that's …

There are robots that look like people, and then there are robots, like the Ecobot III, that look nothing like humans but have our same biological needs: they have to eat, digest …

The humanoid robot Yangyang can function autonomously, talking and gesturing while interacting with people.

A new report recommends that we decide the legal status of robots sooner rather than later. It even suggests that they could be given personhood and rights.

Mesmerizing drone ballet from Kmel Robotics and Lexus.

The Actroid robot holds clues for climbing out of the uncanny valley.

In the last months we've been witnessing a refugee crisis of huge proportions. More than a million people crossed the sea to flee violence in Africa and the Middle East. Together …

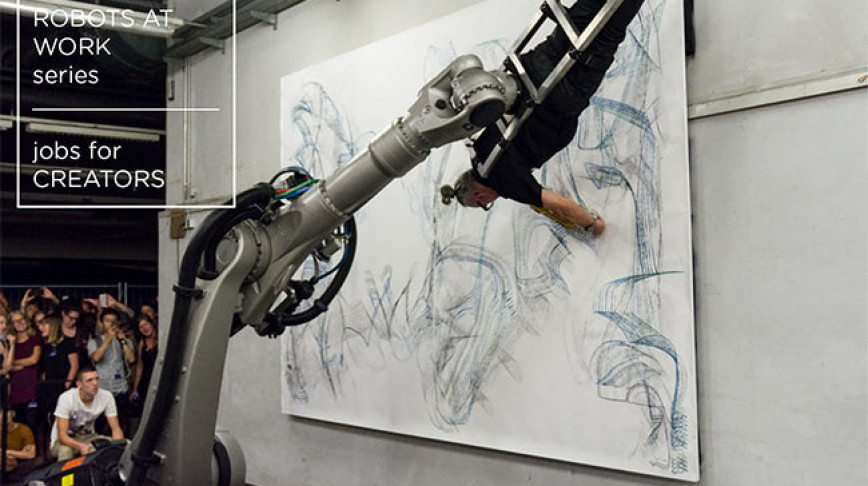

Have a look at our series Robots at Work! In this episode we present you five jobs for creators, the ones that build with their hands.

A robot inspired by a sloth was developed in order to control crops growing in our fields.

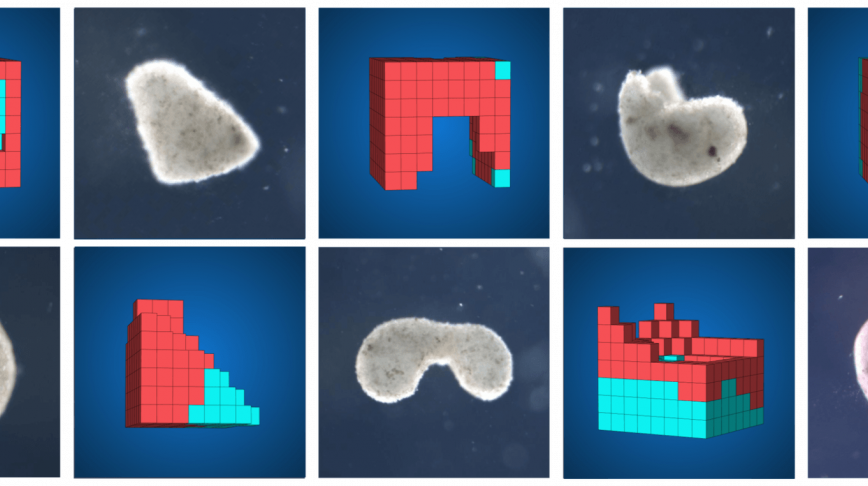

In 2020, scientists made global headlines by creating “xenobots” – tiny “programmable” living things made of several thousand frog stem cells. These pioneer xenobots could …

Although there are numerous researchers out there creating humanoid robots, none are as explicit about the close relation between anthropomorphism and narcissism as professor …

We posted this fella two years ago but the guys at Boston Dynamics haven't been sitting still. Improvements have been made and Big Dog got puppies! Little Dog (a learning robot …

A future in which prosthetic patches prevent bodies from aging? Or a sexist view on femininity in robotics? Either way, the question is whether they are up- or downgrades of …

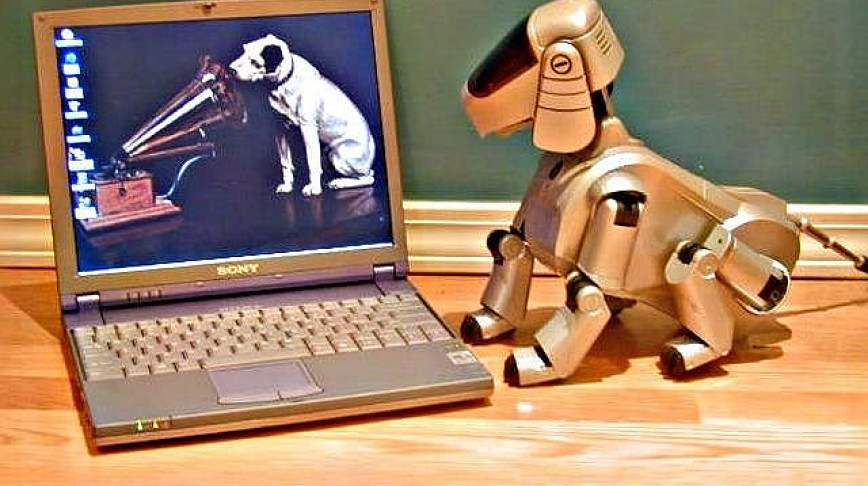

Tiger meets tiger. Apparently some of the owners of a vacuum cleaning robot feel the need to 'costumize' their device. We are now awaiting the witty bio-robotics company that …

Remember Asimo? Honda has now developed a new Brain-Machine Interface technology that allows humans to control the humanoid robot simply by thinking certain thoughts. The BMI …

Professor Hiroshi Ishiguro (Intelligent Robotics Laboratory at Osaka University) has done it again! This time in cooperation with robot-maker Kokoro Co. Ltd. Objective: to …

The Berkeley Robotics & Human Engineering Laboratory is researching a way to improve the performance of human bodies. They are doing innovative research in the field of …

Designer, artist and engineer Dan Chen has developed the 'End of Life Care Machine', a machine designed to guide and comfort dying patients with a carefully scripted message. …

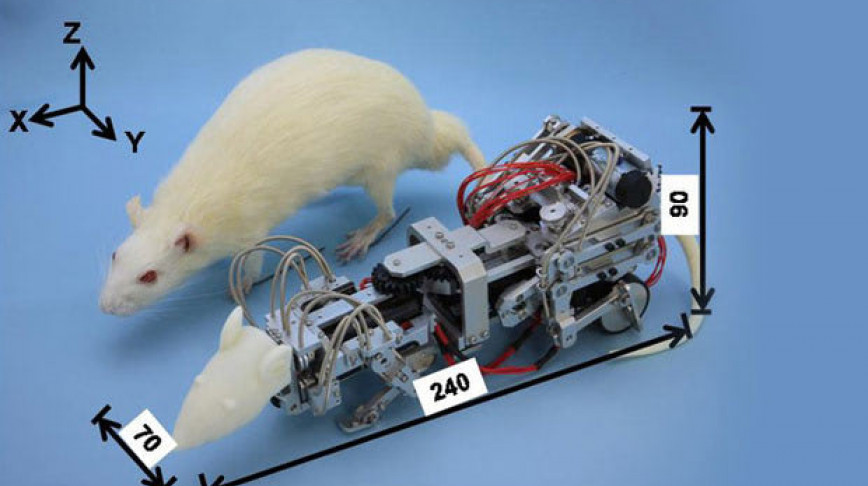

The robot in the picture above chases and attacks the living rat right beside it. The W-3, as the robot is named, is designed to make rats seriously depressed. In fact, this …

Drones are the mosquitoes of the 21st century. A small town in Colorado will be voting on an ordinance for drone hunting licenses for shooting down the wild robotics.

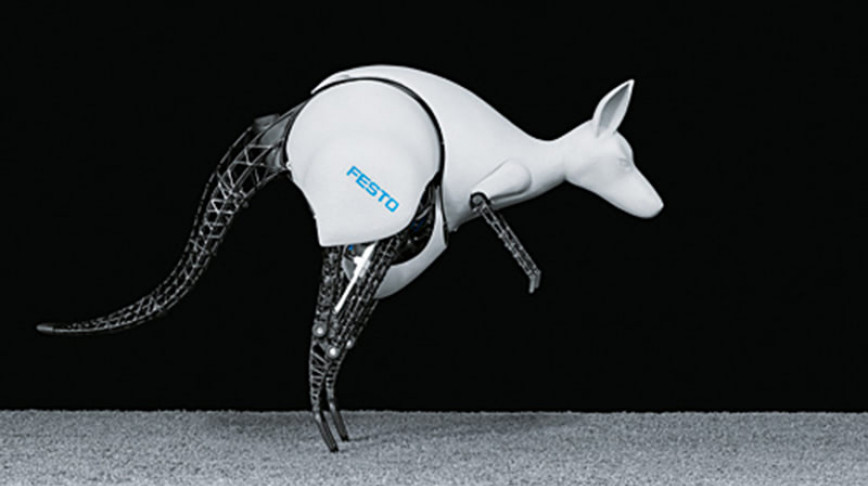

The BionicKangaroo technologically reproduces the unique way a kangaroo moves.

The recently launched Campaign Against Sex Robots is pushing for a ban on the further development of sex robots.

From chef, to nurse, and also lover. Get ready, a new generation of robots is going to invade our lives!

Dystopian future scenarios filled with evil robots are everywhere. We are afraid of robots treating us badly, but what will happen if it is the other way around? According to …

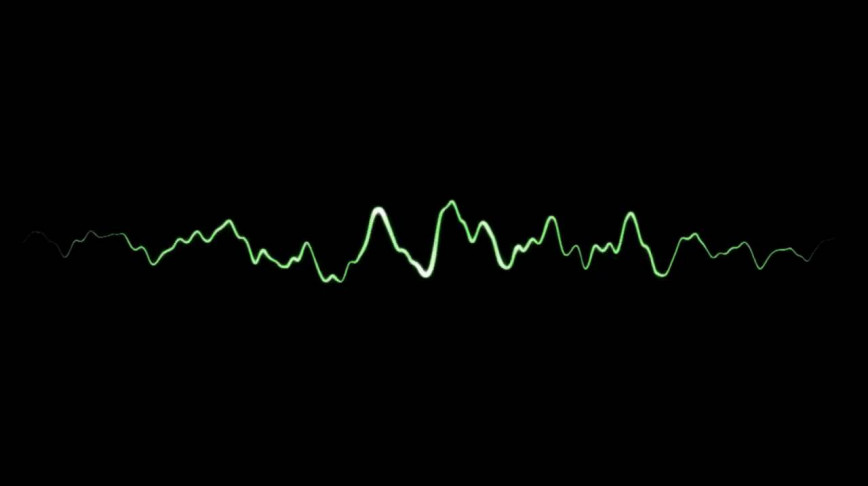

Researchers at MIT have created a system that is able to simulate sounds that are so perfect that they can even fool human listeners.

Lego announced the next generation of building bricks, bringing the creations to life.

An "electronic personhood" for robots has been discussed in the European Parliament recently, raising big questions about equality, citizenship, legal and, ironically, human rights for artificially intelligent machines.

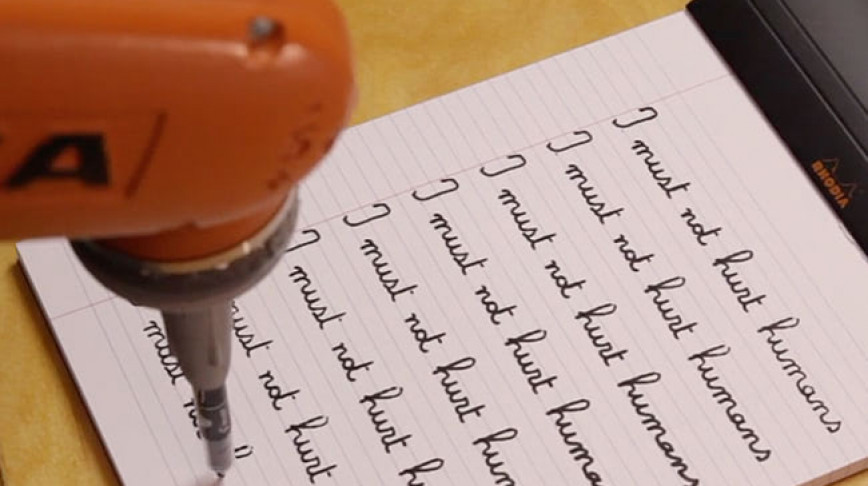

The Punishment is an installation featuring a robotic arm that mimics a kid's handwriting perfectly, and repetitively writes "I must not hurt humans".

This is post number one of our series 'Robots at Work'. In this episode we present you five jobs for caretakers, the ones that work with their hearts.

Meet the Robo Wunderkind, a new breed of smart toys that introduces children to the basics of coding and robotics in a playful way. Advanced technologies are increasingly embedded …

As we showed with HUBOT, we can use new technologies and robotics to make our work more enjoyable, interesting and humane. Aside from our speculative jobs, a lot of robotic …

Delve into the science and fiction of robots at V&A Dundee’s latest exhibition: Hello, Robot. Contemplate the existence of robots and how they have both shaped, and been …

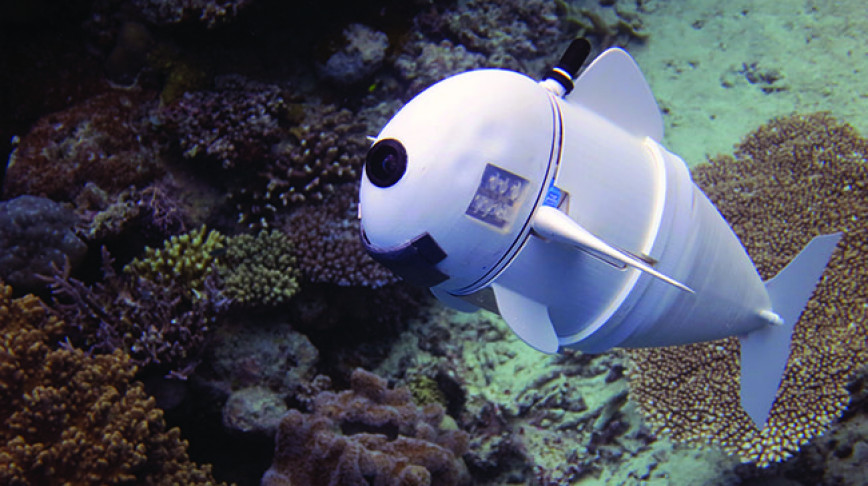

Earth’s oceans are having a rough go of it these days. On top of being the repository for millions of tons of plastic waste, global warming is affecting the oceans and upsetting …

Would you pray to a robot deity? A group of Japanese Buddhists is already doing so. Meet Mindar, the robot divinity shaped after the Buddhist Goddess of Mercy, also known as …

Like it or loathe it, the robot revolution is now well underway and the futures described by writers such as Isaac Asimov, Frederik Pohl and Philip K. Dick are fast turning from …

Make no mistake, you're not looking at the latest Barbie line: These are The Pussycat Dolls. Formerly an LA stripper show burlesque show, now upgraded to be pop music …

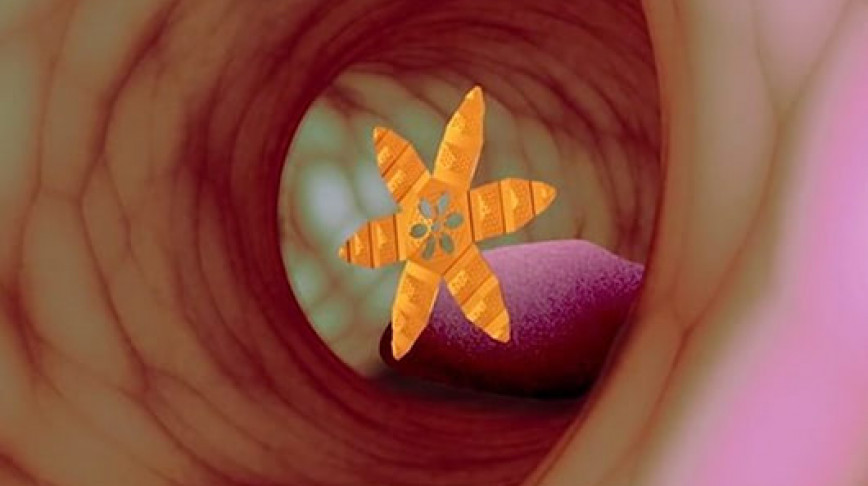

With recent successful experiments, we may see doctors switching from the single forceps to hordes of so-called microgrippers.

A report on the Empowered by Robots conference during DDW16, demonstrating fruitful collaboration between humans and robots.

Speculative designer Agi Haines' work focuses on (re)designing the human body, and speculates upon future scenarios.

It should come as no surprise that artificial intelligence naturally extends into the way we work. Let's look at how AI changes the way we relate to work.

A large-scale Asian food market serving in vitro meat, a transatlantic expo on the future of nature and a travelling exhibition that explores our relationship with AI. These five …

Current social distancing measures are all about keeping a physical distance from each other in order to flatten the curve. But this does not mean we have to keep a distance from …

The deepest regions of the oceans still remain one of the least explored areas on Earth, despite their considerable scientific interest and the richness of lifeforms inhabiting …

The first essay ever written on Next Nature, published in Next Nature Pocket and in Entry Paradise, New Design Worlds. (download pdf) (German version: Erkundungen im Nächste …

Die Natur verändert sich mit uns (English version: Exploring Next Nature) by Koert van Mensvoort, published in Entry Paradise, Neue Welten des Designs, Gerhard Seltman, Werner …

Smile! We are your friends. Robots will play an important role in our future society. We need to ensure that robots are socially compatible with us in order for society to accept …

Blinkybugs are small, simple, electromechanical bugs that respond to stimuli such as movement, vibrations, or air currents. They are constructed by hand from LEDs, wire, and …

Henk Rozema displaying his invention (2006), the digital tombstone "Digizerk". It was only a matter of time before global digitalization would be introduced to cemeteries. The …

Just like centipedes, OLE can roll up into a ball when danger threatens and retract its six legs. Its heat-resistant shell is made of a ceramic-fibre compound which can withstand …

Erich Berger has a 96-year-old grandmother who is a cyborg. She features dentures, hearing aids, glasses, a pacemaker, a metal implant on the pinkie toe, and more. Quotes from the …

A bug that does the cleaning? This 1-meter (39-inch) tall, 1.35-meter (53-inch) long prototype robot, named "Lady Bird", is designed to clean public restrooms at highway rest …

Robotarium X, the first zoo for artificial life, approaches robots very much in the way we are used to looking at life in old nature. We, humans, enjoy watching and studying …

Tickle is a small robot that walks on the human body to generate a pleasant, tickling sensation. It has two motors and rubber feet for a good grip on the skin. When it encounters a …

Murata Boy is a robot capable of riding a bicycle unassisted: balancing, pedaling and steering the bicycle, turning the bike to avoid obstacles, etc. And this is his nephew, …

Famous technologist and futurist Ray Kurzweil states: it's going down by 2029, so be prepared to get digital on entirely new levels. According to Kurzweil, machines will have both …

Shot taken before the robot in question short-circuited and started to kill all humans in sight.

An industrial robot has been given an unusual production task: writing out the full Martin Luther Bible "by hand" in a calligraphic style. The robot arm appears to be a fairly …

With its 50 cm wing span and 80 grams of weight, this high tech bird is ready to infiltrate swift surveillance squads next summer. Aerospace engineering students at TU Delft, …

In 1921 the word "Robot" (meaning "labor") was introduced by Czech writer Karel Čapek. In his play "R.U.R." (Rossum's Universal Robots), robots seem happy to work for humans. …

The struggle between the born and the made is being fought out in a wardrobe on Saturn. By: 1stavemachine.com | Related: Sixties Last

"Nature adapts, even to human actions that seem to destroy everything. The amazing power of evolution has given birth to a new species of insect. Their ideal habitats are old …

The 1966 science-fiction movie Fantastic Voyage famously imagined using a tiny ship to combat disease inside the body. With the advent of nanotechnology, researchers are inching …

This new 3D webcam with two cameras spaced approximately as far apart as human eyes is our anthropomorphic object of the week. Can you feel it, watching you? The little brother …

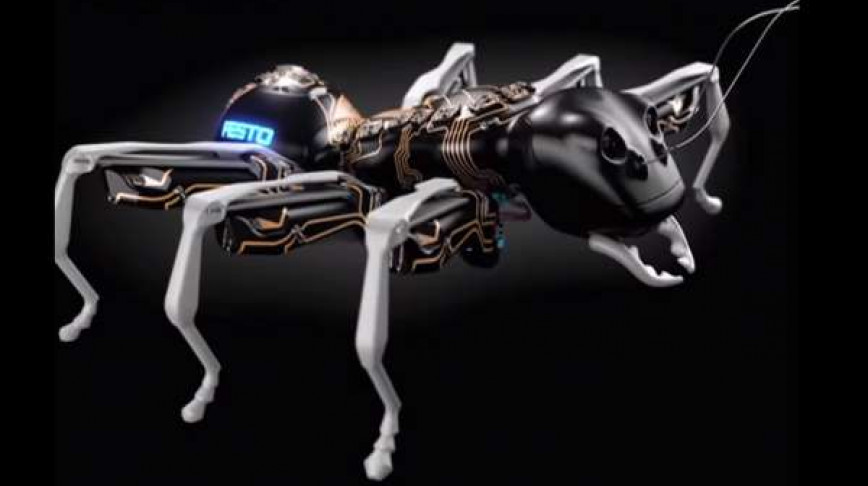

Festo Bionic Learning Lab demonstrates their new technologies inspired by nature. Another step towards artificial pets replacing extinct animals, or just an exposé of scientific …

While many people fear that computers will overtake humans, Ray Kurzweil states that robots will merge with humans, robots the size of cells which can do the job way more …

Scientists at the University of California created a neural implant for a beetle that gives them wireless control over the insect. Electrical signals delivered via the electrodes …

Robots are penetrating our homes... disguised as cats? On July 30, Sega Toys releases an el-cheapo simulation cat toy for robot cat lovers with allergies or pet-unfriendly …

Let the robots do the dirty work! This real-life Wall-E Recycling robot, part of the $3.9 million DustBot research program that is trying to improve urban hygiene, collects trash …

We are anxiously waiting for the robot that makes the sushi, but at Squse, they have created the hand that can carefully place the delicacies in a box without crushing them. …

Quote Eric Horvitz: "After finishing my doctoral work, I returned to Stanford Medical School to finish up the MD part of my MD/PhD. During one of my last clinical rotations, I …

Sind wir noch zu retten? That was the slogan of this year’s Ars Electronica festival in Linz (Austria). Titled ‘REPAIR’, the media art festival urged us to leave our scepticism and …

Ever wondered why there is so much competition in the world of operating systems? This video made by Leon Wang illustrates that "old nature" mechanisms like survival of the …

Kasey McMahon and Derek Doublin demonstrate the tension between people and their environment through the eyes of its non-human inhabitants. Beware of the Virtual Squirrels! The …

Are you familiar with the affliction? Anthropomorphobia is the fear of recognizing human characteristics in non-human objects. The term is a hybrid of two Greek-derived words: …

Protei is a sailing robot that's designed to clean up oil spills without human assistance. After sailing upwind, the bot drifts downwind, zigzagging across the surface to absorb …

Steven Levy writes in Wired on the unexpected turn of the Artificial Intelligence revolution: rather than whole artificial minds, it consists of a rich bestiary of digital fauna, …

In 2009 the Initiative for Science, Society and Policy coined the phrase ‘living technology’ [1] to draw attention to a group of emerging technologies that are useful because they …

"A robot is a mechanical apparatus designed to do the work of a man. Its components are usually electro-mechanical and are guided by a computer program or electronic circuitry. By …

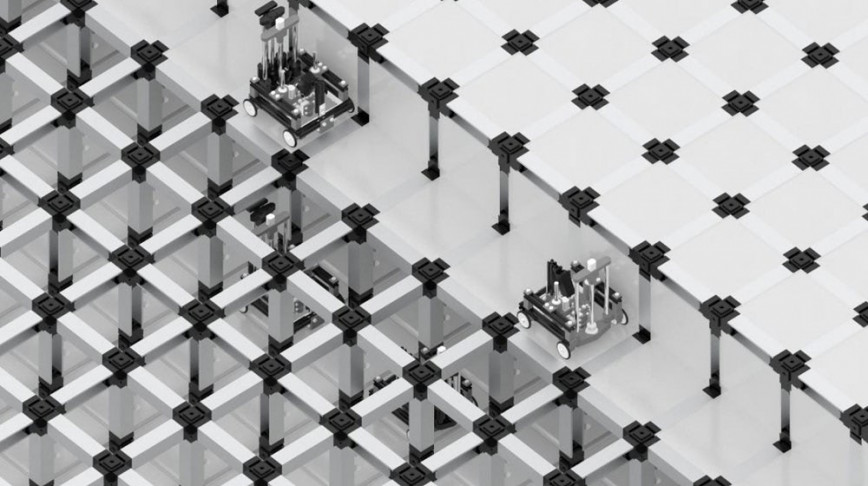

The future of farming is not to be found in further mass-industrialization nor in the return to traditional farming with man and horse power, but rather in swarms of smart, cheap robotic farmers that patiently seed, tend and harvest fields one plant at a time without the need for damaging pesticides.

Though disgusting, sewage is an abundant, nutrient-rich resource. Researchers at the University of the West of England have taken advantage of this fact by creating a robot that turns …

The Senseless Drawing Robot is a painting robot that interprets nearby street and sidewalk traffic and turns it into sprays of pigment. The robot is turning chaos into order, and …

There's a new threat to the world's unemployed. Researchers at Carnegie Mellon University have developed a robot that helps to organize shop inventories, making that trip to the …

For past entries and an introduction to the 11 Golden Rules of Anthropomorphism and Design, click here. People expect many things from each other: Expect them to say hi in the …

There are many people in the world for whom, for various reasons, it is impossible to leave the house or hospital. This means these people are not able to go to work or school. …

During the late 1930s the philosopher Walter Benjamin wrote his widely influential essay ‘The work of Art in the Age of Its Technical Reproducibility’. While describing a general …

A showcase of what we are currently capable of installing in human beings.

A new kind of piezotronic transistor mesh could make for robotic skin that’s as sensitive as your own is, covered in thousands of tiny mechanical hairs.

Kate Darling argues that society needs to update its ethical landscape to include thinking machines.

A Chinese inventor created a $24,500 robot from junkyard scraps.

Robots use swarm intelligence to rebuild coral reefs.

Human test subjects felt empathy for dinosaur robots being cuddled or abused.

LifeHand 2: a prosthetic hand that confers the ability to feel rudimentary shapes and forms by touch.

Robots are coming to replace humans at work. Are they a real threat to the world’s unemployed?

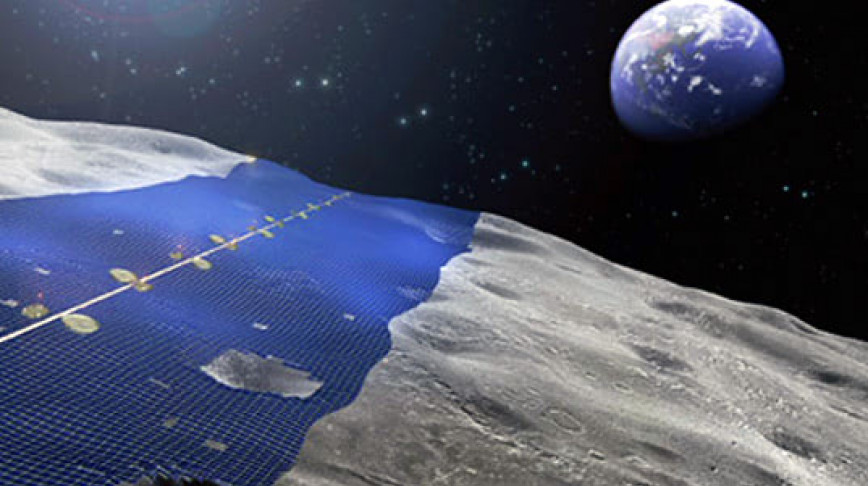

Japanese engineers plan to turn the moon into a giant solar panel station.

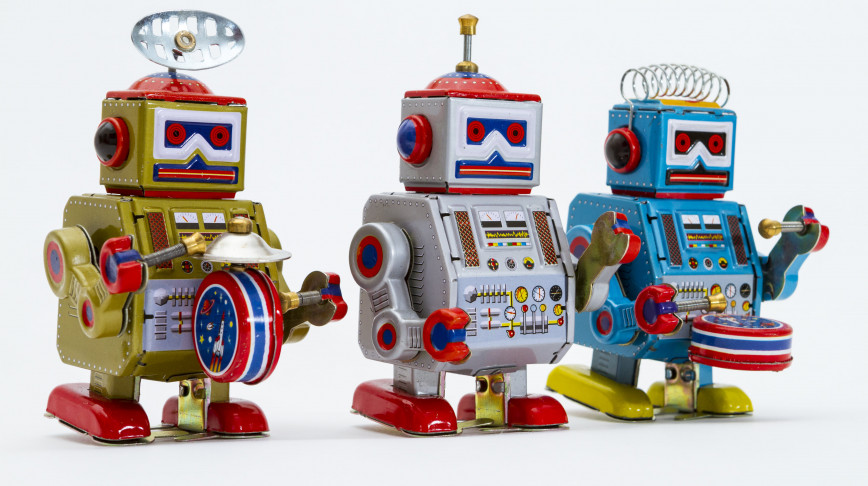

The '50s robot band, Le Trio Fantastique, exemplifies the distant human longing for technology that integrates with our body and senses, to the point of taking our place!

Researchers created a robo-feline able to run and bound while completely untethered.

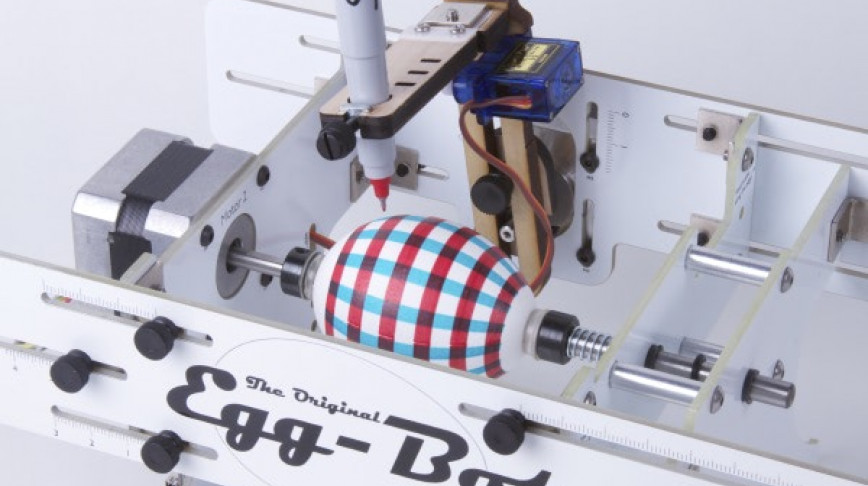

A robot that applies computer controlled motion to precisely decorate your Easter eggs.

Science Fiction taught us to think of robots as human-like beings, yet the robots that actually make it into your home are more likely to look like furniture.

Social roboticist Heather Knight explains why we should give robots personalities.

Researchers from UC San Diego announced that they have 3D-printed tiny microrobots in the shape of fish that are able to detect and remove toxins from liquid.

Although there seems to be no effective drug in use to cure malaria, a scientist robot named Eve may have found a cure.

These robotic ants by Festo are learning to work together, like real ants do.

Robots can already read, talk and reason. Yet, they do not seem to have found limits to their artistic skills either. Meet DOUG_1, the first drawing robot.

How would you feel if robots inherited ethical complications of existence?

A musical robot able to improvise a jazz solo in response to an actual person performing jazz.

Starship, a robot that will remodel our local delivery system.

With its 656 beds, this hospital offers unconventional and leading computerized assistance for all sorts of patients.

What should I wear today? The answer to one of life’s big questions could come from an algorithm created to solve all your fashion problems.

The SAVE THE HUMANS! book is now available on our web shop.

Dom Indoors is the latest research project developed by a construction robotics company called Asmbld. It includes a robotic system that can reconfigure an indoor space within …

The Random Darknet Shopper, with bitcoin to burn, has purchased counterfeit jeans, master keys, dodgy cigs and even a bag of ecstasy tablets. Who is legally liable?

The National University of Singapore released a group of robot swans in the Pandan Reservoir to swim around and keep an eye on water quality.

It seems thinking machines could put the human race in danger. How realistic are these fears?

Stephen Hawking gives his opinion on what technological unemployment, aka machines taking over our jobs, can represent for future human societies.

During the event The Biosphere Code, Stockholm University researcher Victor Galaz and colleagues outlined a manifesto for algorithms in the environment.

A futuristic Japanese hotel will be run by robots, designed to be extremely human-like.

The world’s largest indoor farm in Japan is 100 times more productive than traditional agriculture.

Scientists were inspired by nature to develop a method to shape-shift 4D-printed structures that could one day help heal wounds and be used in robotic surgical tools.

Pokémon Go is slowly taking over our planet. People know more Pokémon than bird or tree species. However, avid players will have noticed that some of the first-gen Pokémon are not available in the game yet.

The first self “driving” boat will be entering the canals of Amsterdam in 2017 with versatile ends.

Read Nicholas Carr's essay on the transhumanist dream of having wings.

There is an artificially intelligent robot called ROSS that is taking over the work of thousands of lawyers, working non-stop and reading faster than any human attorney can.

While robots are becoming more and more human, with all their sensors and information processing abilities, is it likely that they too could develop mental illnesses?

Nissan created the e-NV200 workspace: an electric van with zero emissions that is packed with everything you need to work efficiently.

Watch this robot solving the Rubik's Cube in 1.047 seconds.

FarmBot Genesis is humanity's first open-source CNC farming machine designed for at-home automated food production.

In San Francisco, an industrial robot has just tattooed a person for the first time.

Meet Amelia, the first robot to work alongside human coworkers in a council job.

A new wave of automation lies in pizza. Not only will robots deliver your pizzas in the future, they will even make them.

The first time you switch on your self-driving car, punch in your coordinates and cruise off to the soulless thrum of an electric motor you’ve got to wonder: does this thing know what it’s doing?

The elderly may toss their walkers for this robotic suit.

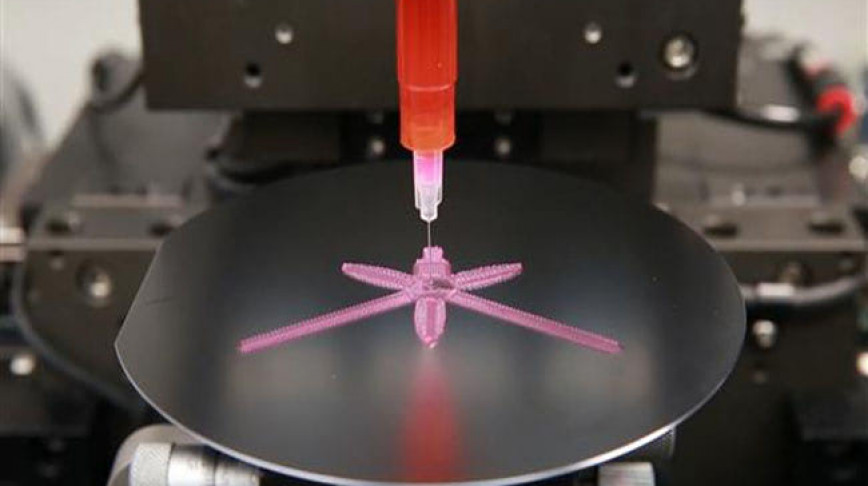

Researchers at the University of Illinois released a step-by-step guide to building 3D-printed bio robots with living muscles.

A needle-free oral delivery system might in the future dissolve the old way of injecting vaccines by syringe.

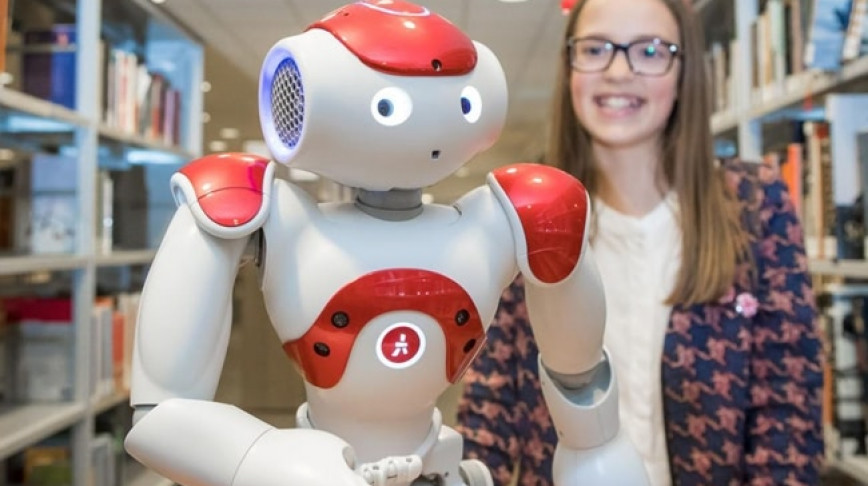

The Dutch province Gelderland-South uses two educational robots to increase kids' knowledge of such technology.

A Japanese startup brings Farmville back into real life by letting players grow physical food.

This is post number three of our series 'Robots at Work'. In this episode we present you five jobs for facilitators, the ones who love to work with technology.

This is post number four of our series 'Robots at Work'. In this episode we present you five jobs for entrepreneurs, the ones who love doing big business.

This is post number five of our series 'Robots at Work'. In this last episode we present you five jobs for the extraordinary, the ones who won't let themselves be categorized.

A drone made for rescues in bad weather conditions on open water.

A rosy-cheeked kid learns her first words, cries when her babysitter leaves, smiles when she’s happy, but she’s not real. BabyX is an AI research-in-progress by a company named Soul Machines.

As if stand-alone technologies weren’t advancing fast enough, we’re in an age where we must study the intersection points of these technologies. How is what’s happening in robotics …

Scientists believe the introduction of a hormone-like system, such as the one found in the human brain, could give AI the ability to reason and make decisions like people do. …

Agriculture may be one of the oldest of our technologies. Over time it has developed, changed, revolutionized, industrialized - or simply put, it has evolved. Today’s farms are …

If you teach a robot to fish, it’ll probably catch fish. However, if you teach it to be curious, it’ll just watch TV and play video games all day. Researchers from OpenAI …

Sex is one of the most powerful, fundamental human drives. It’s caused wars, and built and destroyed kingdoms. It occupies a significant percentage of most people’s thoughts. As …

Stick-on shoes, wakeup lights, bionic limbs: these are examples of humane technology. But what exactly does this mean? It can best be explained in contrast with its opposite. …

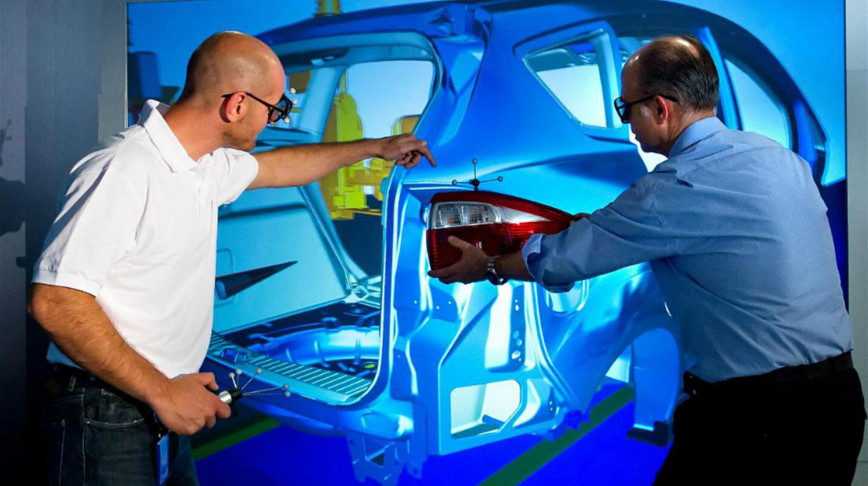

Robots are coming for our jobs. Virtual reality is coming to make the jobs that remain easier to accomplish. All of the world’s manufacturing sectors are in the process of …

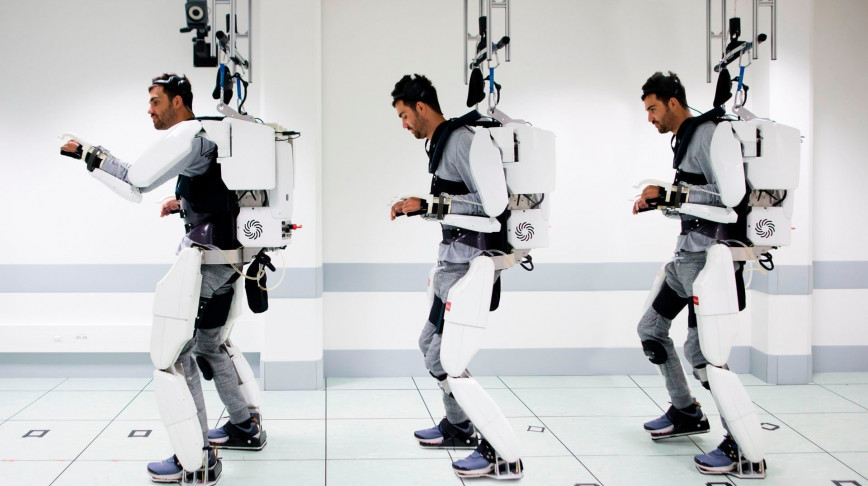

A breakthrough technology that responds to signals from the brain has transformed the life of a paralyzed 28-year-old man called Thibault. Four years after the initial incident …

At just a millimeter wide, Xenobots are “neither a traditional robot nor a known species of animal”; they are “a new class of artifact: a living, programmable organism”, says …

Biosensors, cultivated meat and spider’s silk. For synthetic biologist and Next Nature ambassador Nadine Bongaerts, these are all advances towards a new world, where polluting …

The 14 Golden Rules of HUBOT are rules of conduct which must be followed and to which everyone needs to hold each other to account. Themes like respect, teamwork, creativity, and …

We are now so used to communicating with some robotic device in our homes. But this skill cost Furbies a national ban in the US.

A team of researchers is currently investigating the fusion of human brain cells with artificial intelligence. Creepy or innovative?

Seven examples of how design will help us build the farm of tomorrow.